Top 10 Design Tools For UX And UI (2025 GUIDE)

13/12/2022



Selecting software for UX and UI design is never easy. You want something that lets you flex the full extent of your creative muscle, but you also need a tool that opens your mind to new ideas and approaches you might otherwise have missed.

Then there’s the question of how well the tool fits a team’s workflows, what integrations it offers, and what return on investment each pricing plan delivers, among other factors. To help, we list the top ten UX and UI design tools to consider in 2025 and highlight what makes each one stand out.

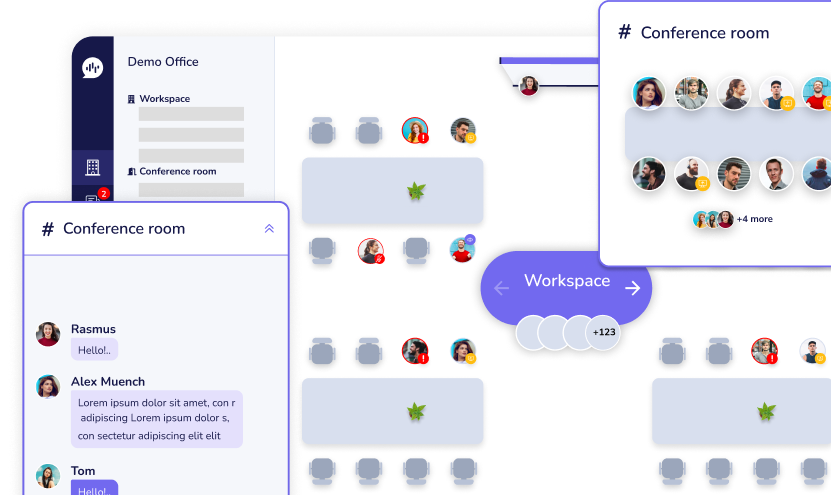

Sketch

Sketch’s custom grids make it easy to adapt your UI designs to different target device screens and their dimensions. It also lets you reuse components across your designs to keep them consistent, which is essential for branding.

Beyond its presets and artboards, Sketch offers pixel-level precision through snapping and smart guides, so your work stays clean. Its editable Boolean operations also make it easy to revise shapes at different stages of a project. Unfortunately, Sketch is only available on macOS, which can complicate collaboration with teammates on other platforms.

Source: Sketch

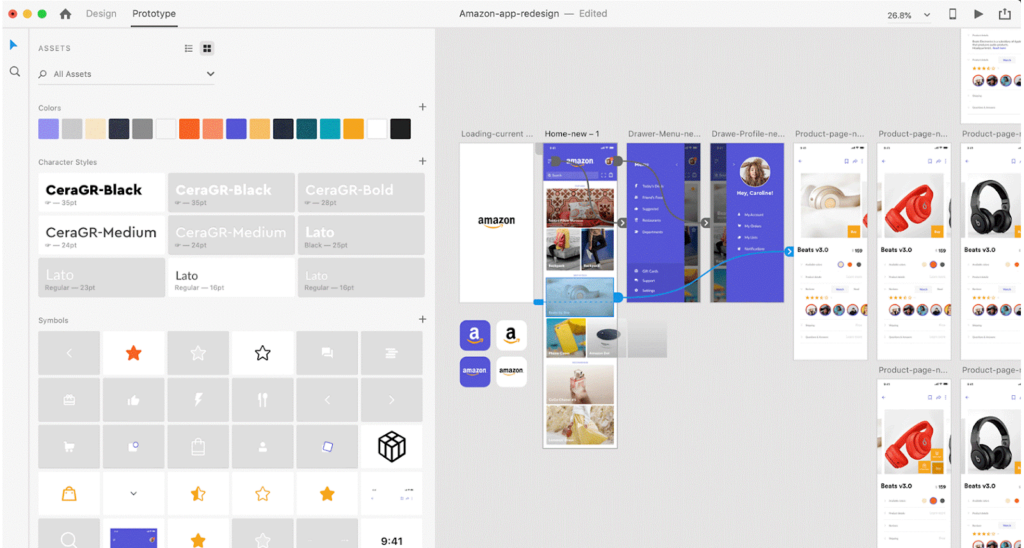

Adobe XD

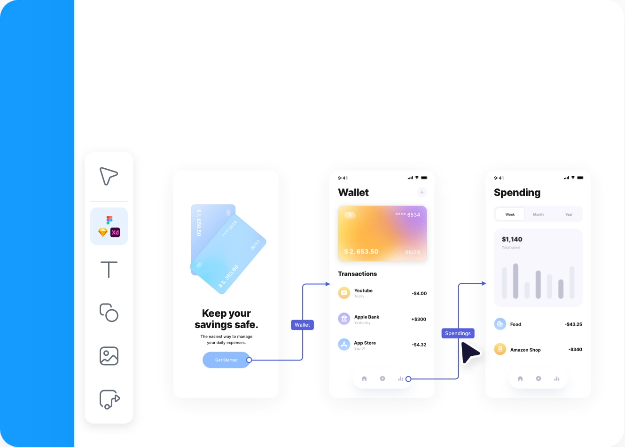

One standout feature of Adobe XD is 3D Transforms, which lets you present elements from specific perspectives (angles) and at varying depths. This makes it a good fit for designs intended for augmented and virtual reality systems.

Adobe XD also offers extensive prototyping capabilities, enabling designers to publish and share interactive designs. With animation options for even the smallest components, plus voice prototyping, you can quickly bring a design to life.

The result is a prototype you can speak to, one that speaks back and makes every action feel like an event of its own while still belonging to a consistent whole. Adobe XD’s assortment of UI kits extends this to Google Material Design, Apple’s design guidelines, Amazon Alexa, and many others.

Source: Toptal

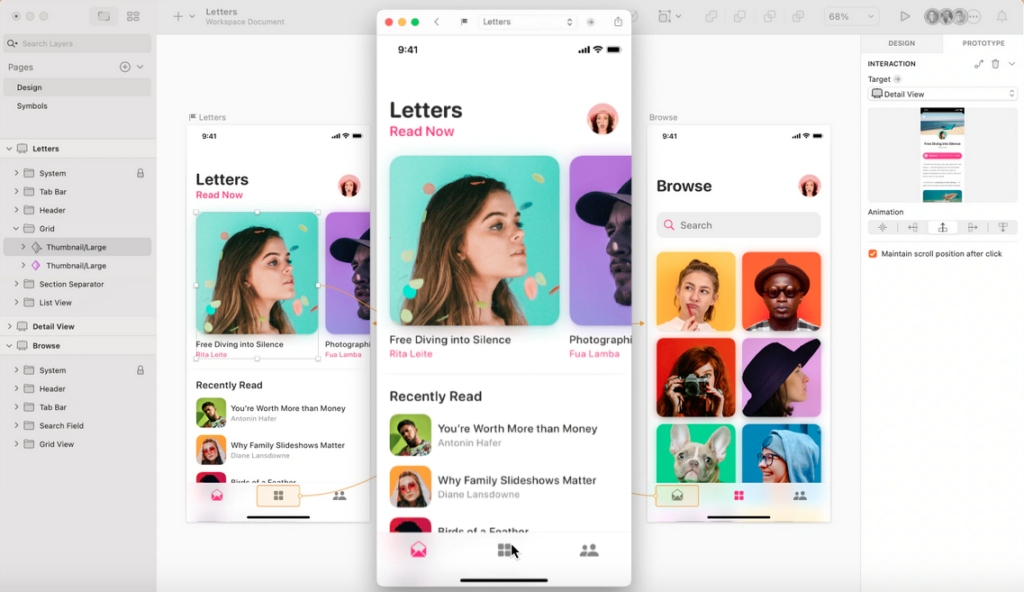

Figma

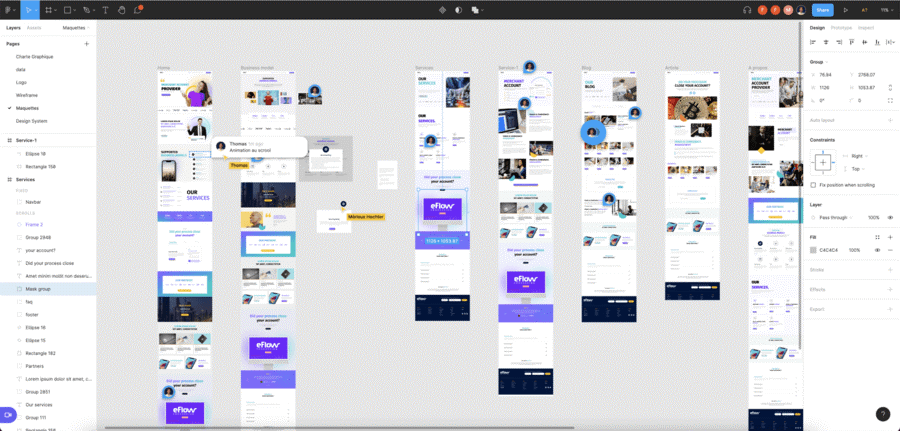

Figma’s browser-based wireframing capabilities make it a go-to tool for designers who want to quickly lay out the skeleton of their designs and share it with colleagues. It also supports collaboration by letting users leave comments on wireframes and receive feedback in real time.

Figma may seem best suited to presentations and brainstorming, thanks to companion tools like FigJam and its drag-and-drop approach, but it also lets you turn wireframes into clickable prototypes so you can get a taste of the intended experience.

Source: Digidop

Balsamiq

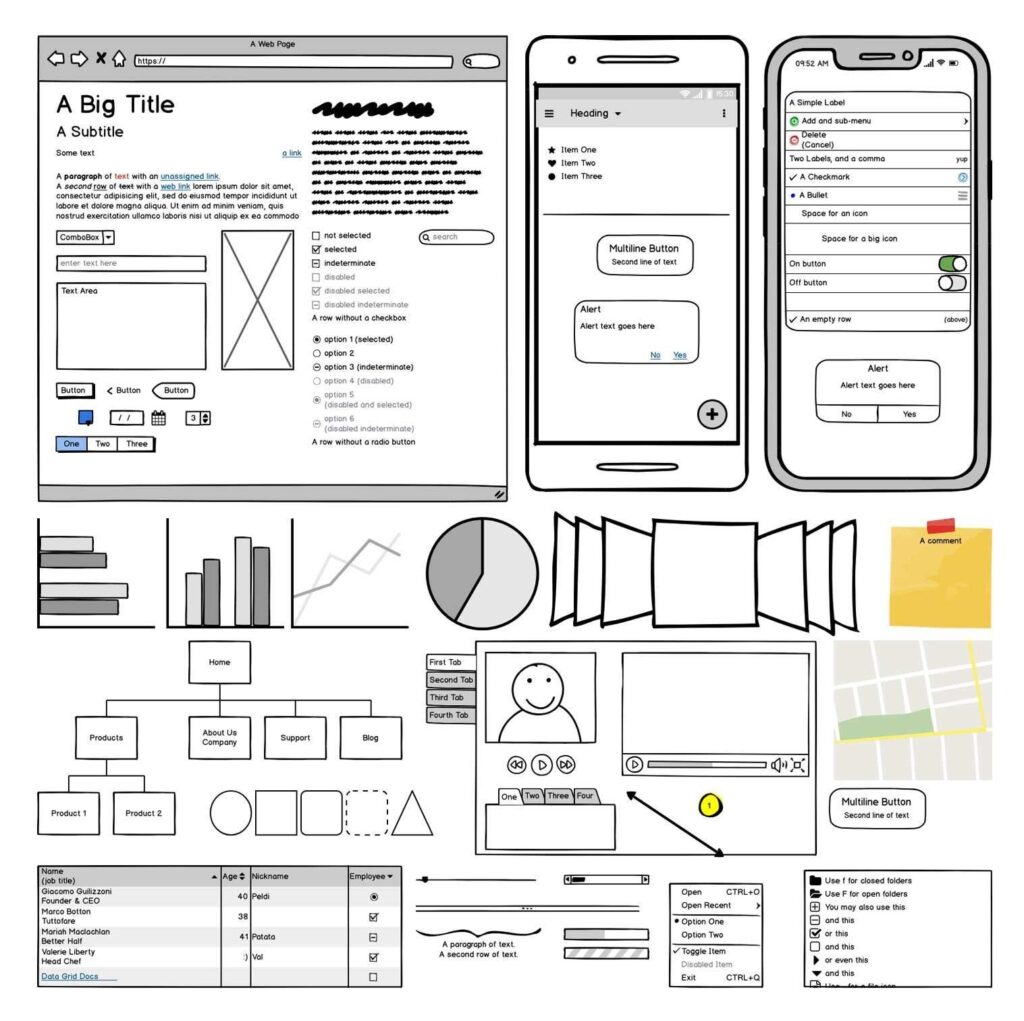

Balsamiq offers a much leaner take on wireframing. It goes easy on add-ons and keeps users focused on recreating their whiteboard or notepad workflow. Even so, it includes numerous built-in components you can drag and drop into your project’s workspace with minimal learning time. Balsamiq also works on both Windows and Mac.

Source: Balsamiq


Overflow

Overflow lets you combine designs made in tools such as Adobe XD, Sketch, and Figma into coherent user flows that map the journey through your app. You can also frame screens in device skins.

As you draw user flow diagrams, you can use different shapes and colors to lay out a process’s logic. Viewers can then follow the diagram easily and see what happens, and which screen appears, when a particular condition is met. Overflow can also convert your prototype links into connectors in the diagram, so you don’t have to redo that work.

Source: Overflow

FlowMapp

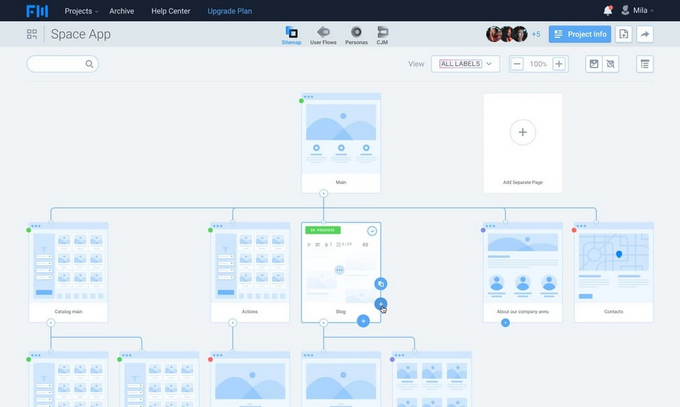

FlowMapp takes a more stripped-down approach to creating user flow diagrams. This makes it well suited to designers still in the planning phase who don’t yet have many finished screens to include.

It may seem rudimentary, but FlowMapp can help you make important discoveries. For instance, you might find that some screens need to be split, with one reached through a button on the other, while others should be merged into one because their functionality is closely related.

Because FlowMapp gives a high-level view of the whole journey, other stakeholders such as copywriters and sales executives can contribute to the UX plan with a clearer understanding of its opportunities and constraints. It’s also useful for deciding where to place CTAs and additional messages, such as fraud warnings at checkout or prompts that collect user feedback.

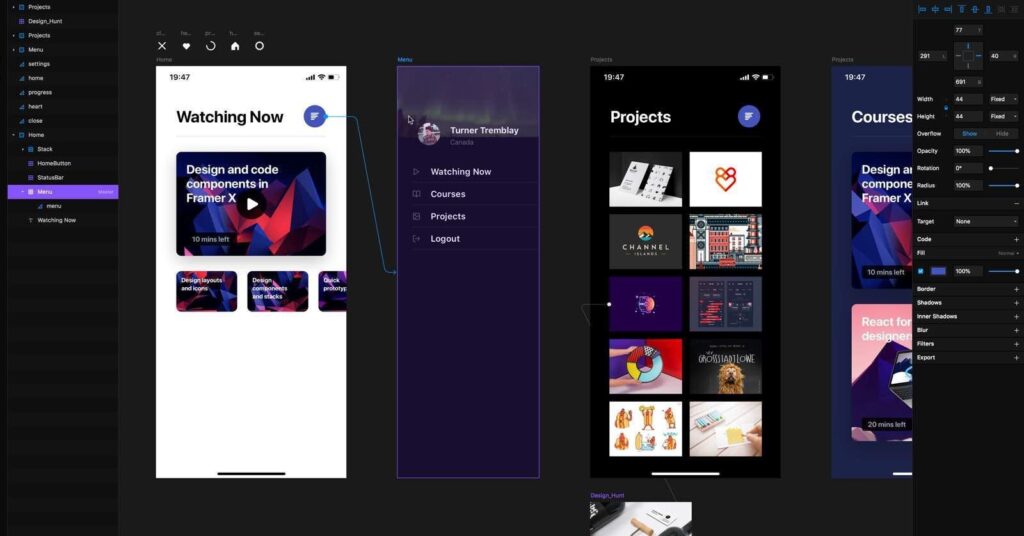

Framer

Framer’s code-based origins and compatibility with React suit designers focused on the latest web technologies. Even so, it also offers user-friendly visual UI design tools and usability testing features.

More importantly, Framer has plugins that let designers embed media players, grids, and other elements that pull in content from services like Twitter (now X), Snapchat, Spotify, SoundCloud, and Vimeo. It also offers templates across a variety of categories, including landing pages, startups, splash pages, photography, and agencies.

Source: Goodgrad

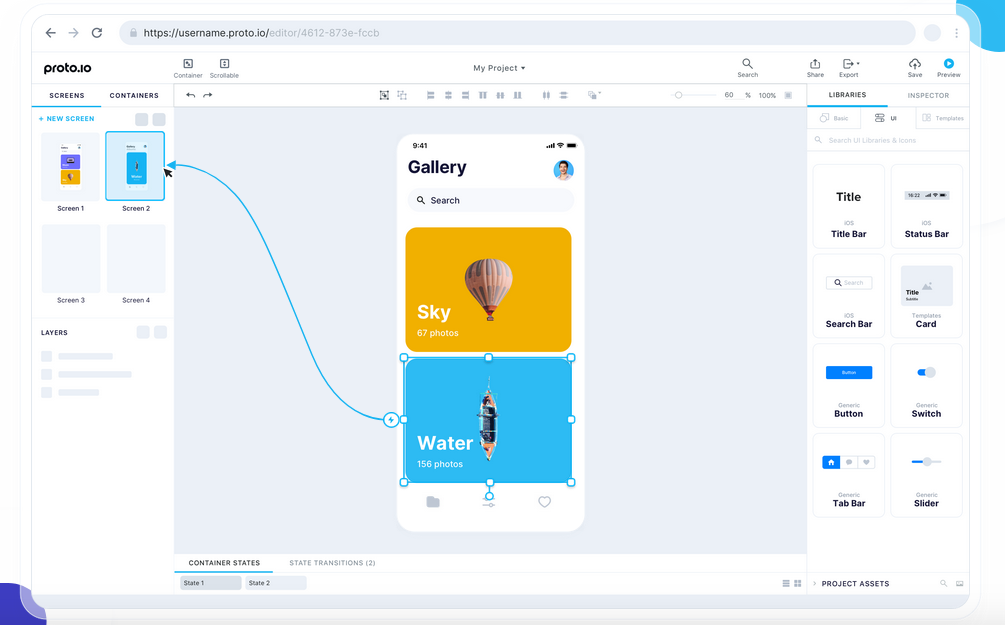

Proto.io

Proto.io gives you thousands of templates and digital assets, plus hundreds of UI components, as starting points for bringing your designs to life in the web browser. You can also begin your prototyping journey by importing files from Adobe XD, Figma, Photoshop, and Sketch.

Beyond that, you can define different responses to touch events, experiment with a wide range of screen transitions, and use gestures, sound, video, and dynamic icons. Proto.io offers mobile, web, and offline modes.

Source: Proto.io

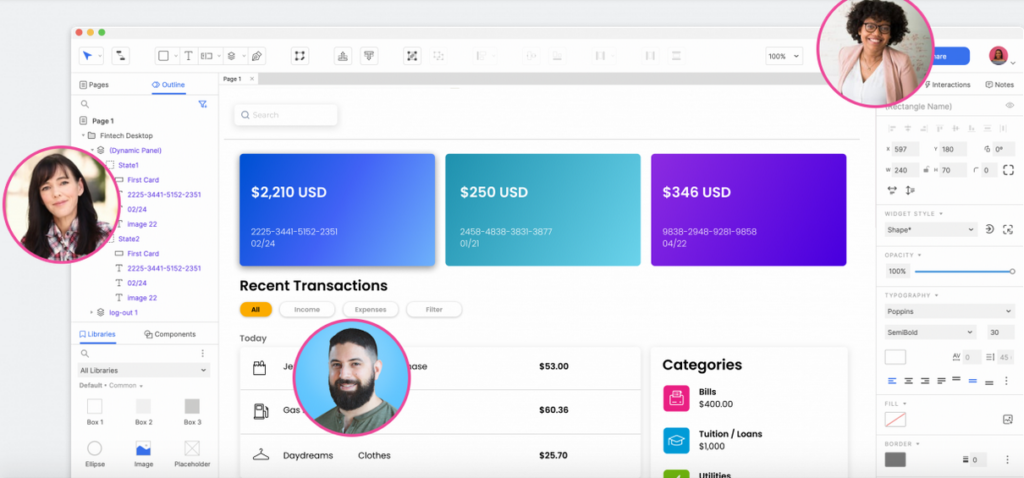

Axure

Axure lets you add conditional logic to your prototypes, making their behavior easier to follow. It also encourages you to document as you build detailed, high-fidelity prototypes. Combined with the ability to test functionality and generate code for developer handoff, this lets team members review work quickly with minimal oversight and get releases ready much faster.

Source: Axure

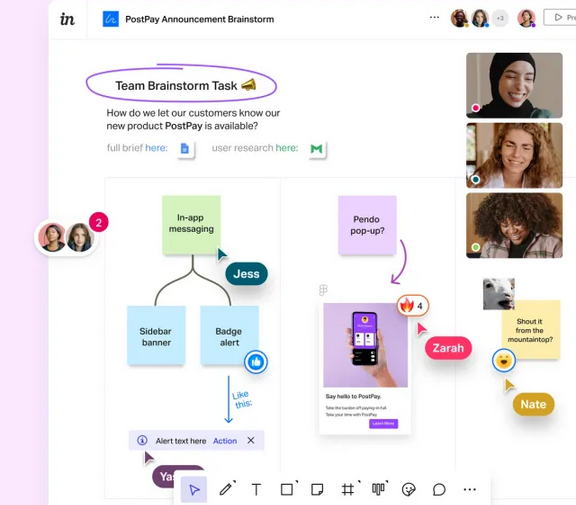

InVision

InVision incorporates digital whiteboarding into the journey to a working prototype, which makes it great for projects where a team wants to keep ideation running concurrently with actual design work for as long as possible.

It offers a solid range of integrations, from project management tools like Jira and Trello to communication tools like Zoom and Slack. You can even connect Spotify to provide a soundtrack for team members doing freehand brainstorming.

Source: Invisionapp

Wrapping Up

Every tool has pros and cons, so always consider which phase of the project a tool fits into, how well it brings everyone together, and how much creativity it supports. While we’ve focused on our top ten picks, many other tools, such as Marvel, Origami Studio, and Webflow, could also shape UI design in 2025. For professional help selecting the right UX and UI design tools, contact us for a free consultation.
