Triggers and Events: How AWS Lambda Connects with the World

10/01/2025



Welcome back to the “Mastering AWS Lambda with Bao” series! In the previous episode, SupremeTech explored how to create an AWS Lambda function triggered by AWS EventBridge to fetch data from DynamoDB, process it, and send it to an SQS queue. That example gave you the foundational skills for building serverless workflows with Lambda.

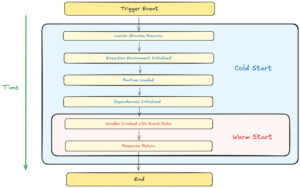

In this episode, we’ll dive deeper into AWS Lambda triggers and events, the backbone of AWS Lambda’s event-driven architecture. Triggers enable Lambda to respond to specific actions or events from various AWS services, allowing you to build fully automated, scalable workflows.

This episode will help you:

- Understand how triggers and events work.

- Explore a comprehensive list of popular AWS Lambda triggers.

- Implement a two-trigger example to see Lambda in action.

Our example is simplified for learning purposes and not optimized for production. Let’s get started!

Prerequisites

Before we begin, ensure you have the following prerequisites in place:

- AWS Account: Ensure you have access to create and manage AWS resources.

- Basic Knowledge of Node.js: Familiarity with JavaScript and Node.js will help you understand the Lambda function code.

Once you have these prerequisites ready, proceed with the workflow setup.

Understanding AWS Lambda Triggers and Events

What are the Triggers in AWS Lambda?

AWS Lambda triggers are configurations that cause a Lambda function to execute in response to specific events. These events are generated by AWS services (e.g., S3, DynamoDB, API Gateway) or by external applications integrated through services like Amazon EventBridge.

For example:

- Uploading a file to an S3 bucket can trigger a Lambda function to process the file.

- Changes in a DynamoDB table can trigger Lambda to perform additional computations or send notifications.

How do Events work in AWS Lambda?

When a trigger is activated, it generates an event: a structured JSON document containing details about what occurred. Lambda passes this event to the function as its input.

Example event from an S3 trigger:

    {
      "Records": [
        {
          "eventSource": "aws:s3",
          "eventName": "ObjectCreated:Put",
          "s3": {
            "bucket": {
              "name": "demo-upload-bucket"
            },
            "object": {
              "key": "example-file.txt"
            }
          }
        }
      ]
    }
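To make the event structure concrete, here is a minimal sketch of how a handler might pull the bucket name and object key out of an event shaped like the one above. The helper name listUploadedObjects is hypothetical, and the sample event is trimmed to the fields shown in this article; real S3 events carry more fields.

```javascript
// Hypothetical helper: collects bucket/key pairs from an S3-style event.
function listUploadedObjects(event) {
  // Guard against events that have no Records array at all.
  const records = Array.isArray(event?.Records) ? event.Records : [];
  return records
    .filter((record) => record.eventSource === "aws:s3")
    .map((record) => ({
      bucket: record.s3.bucket.name,
      key: record.s3.object.key,
    }));
}

// Sample event trimmed to the fields used in this article.
const sampleS3Event = {
  Records: [
    {
      eventSource: "aws:s3",
      eventName: "ObjectCreated:Put",
      s3: {
        bucket: { name: "demo-upload-bucket" },
        object: { key: "example-file.txt" },
      },
    },
  ],
};

console.log(listUploadedObjects(sampleS3Event));
```

Keeping the parsing in a small pure function like this makes it easy to try out locally before wiring it into a real handler.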

Popular Triggers in AWS Lambda

Here’s a list of some of the most commonly used triggers:

- Amazon S3:

- Use case: Process file uploads.

- Example: Resize images, extract metadata, or move files between buckets.

- Amazon DynamoDB Streams:

- Use case: React to data changes in a DynamoDB table.

- Example: Propagate updates or analyze new entries.

- Amazon API Gateway:

- Use case: Build REST or WebSocket APIs.

- Example: Process user input or return dynamic data.

- Amazon EventBridge:

- Use case: React to application or AWS service events.

- Example: Trigger Lambda for scheduled jobs or custom events.

- Amazon SQS:

- Use case: Process messages asynchronously.

- Example: Decouple microservices with a message queue.

- Amazon Kinesis:

- Use case: Process real-time streaming data.

- Example: Analyze logs or clickstream data.

- AWS IoT Core:

- Use case: Process messages from IoT devices.

- Example: Analyze sensor readings or control devices.

By leveraging triggers and events, AWS Lambda enables you to automate complex workflows seamlessly.
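Because each trigger delivers a differently shaped event, a function attached to several triggers usually needs to work out which one fired. The sketch below shows one way to do that. The helper name detectEventSource and its return labels are assumptions for illustration, and the checks rely on commonly documented event shapes (record-based triggers carry an eventSource field, SNS spells it EventSource, EventBridge events carry source and detail-type, API Gateway proxy events carry requestContext). Check the event your trigger actually delivers before relying on it.

```javascript
// Hypothetical helper: returns a label describing which kind of trigger
// produced the event. The labels are illustrative, not an AWS convention.
function detectEventSource(event) {
  const first = event?.Records?.[0];
  if (first) {
    // S3, DynamoDB Streams, SQS and Kinesis use "eventSource";
    // SNS records use "EventSource" with a capital E.
    return first.eventSource || first.EventSource || "unknown";
  }
  if (event?.source && event?.["detail-type"]) {
    return "eventbridge";
  }
  if (event?.requestContext) {
    // Other HTTP-style integrations may also set requestContext.
    return "api-gateway";
  }
  return "unknown";
}

console.log(detectEventSource({ Records: [{ eventSource: "aws:sqs" }] }));
console.log(detectEventSource({ source: "aws.events", "detail-type": "Scheduled Event" }));
```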

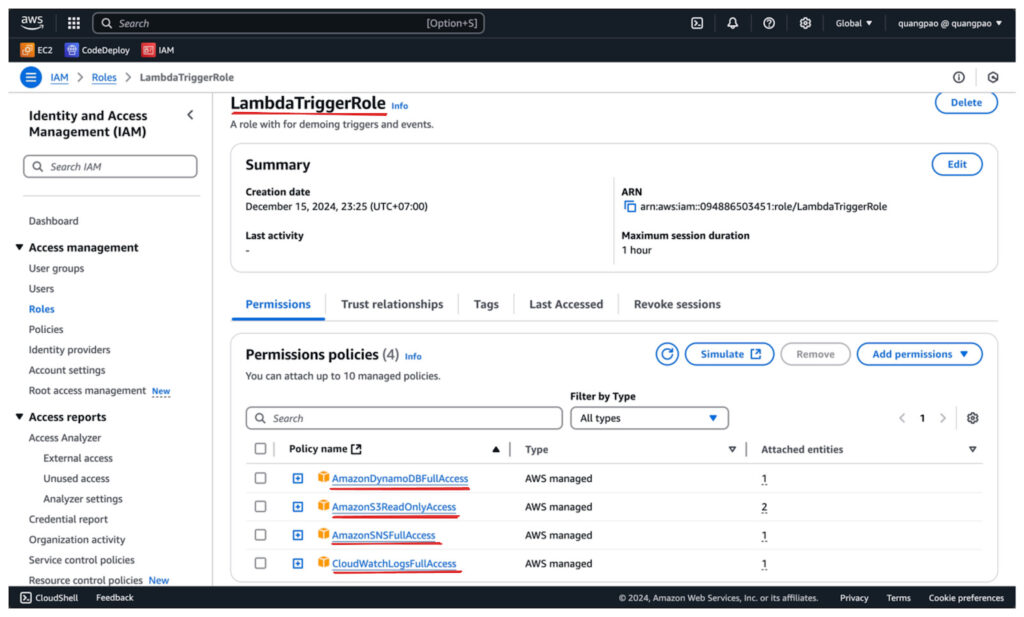

Setting Up IAM Roles (Optional)

Before setting up Lambda triggers, we need to configure an IAM role with the necessary permissions.

Step 1: Create an IAM Role

- Go to the IAM Console and click Create role.

- Select AWS Service → Lambda and click Next.

- Attach the following managed policies:

- AmazonS3ReadOnlyAccess: For reading files from S3.

- AmazonDynamoDBFullAccess: For writing metadata to DynamoDB and accessing DynamoDB Streams.

- AmazonSNSFullAccess: For publishing notifications to SNS.

- CloudWatchLogsFullAccess: For logging Lambda function activity.

- Click Next and enter a name (e.g., LambdaTriggerRole).

- Click Create role.
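The managed policies above are convenient for a demo but broader than this workflow needs. As a rough sketch of a narrower alternative, the snippet below builds an inline policy document limited to the resources used in this episode. The helper name buildDemoPolicy, the Sid values, and the account ID 123456789012 are placeholders, and the action list is an assumption based on what the function does; verify it against the IAM documentation before using it.

```javascript
// Hypothetical helper: builds a narrower inline policy for this demo.
function buildDemoPolicy({ region, accountId, bucket, table, topic }) {
  const tableArn = `arn:aws:dynamodb:${region}:${accountId}:table/${table}`;
  return {
    Version: "2012-10-17",
    Statement: [
      {
        Sid: "ReadUploads",
        Effect: "Allow",
        Action: ["s3:GetObject"],
        Resource: `arn:aws:s3:::${bucket}/*`,
      },
      {
        Sid: "WriteMetadata",
        Effect: "Allow",
        Action: ["dynamodb:PutItem"],
        Resource: tableArn,
      },
      {
        // Permissions Lambda needs to poll the table's stream.
        Sid: "ReadStream",
        Effect: "Allow",
        Action: [
          "dynamodb:DescribeStream",
          "dynamodb:GetRecords",
          "dynamodb:GetShardIterator",
          "dynamodb:ListStreams",
        ],
        Resource: `${tableArn}/stream/*`,
      },
      {
        Sid: "Notify",
        Effect: "Allow",
        Action: ["sns:Publish"],
        Resource: `arn:aws:sns:${region}:${accountId}:${topic}`,
      },
      {
        Sid: "WriteLogs",
        Effect: "Allow",
        Action: ["logs:CreateLogGroup", "logs:CreateLogStream", "logs:PutLogEvents"],
        Resource: "*",
      },
    ],
  };
}

const demoPolicy = buildDemoPolicy({
  region: "ap-southeast-1",
  accountId: "123456789012", // placeholder account ID
  bucket: "upload-csv-lambda-st",
  table: "DemoFileMetadata",
  topic: "DemoFileProcessingNotifications",
});

console.log(JSON.stringify(demoPolicy, null, 2));
```

The printed JSON can be pasted into the IAM Console as an inline policy on the role in place of the full-access policies.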

Setting Up the Workflow

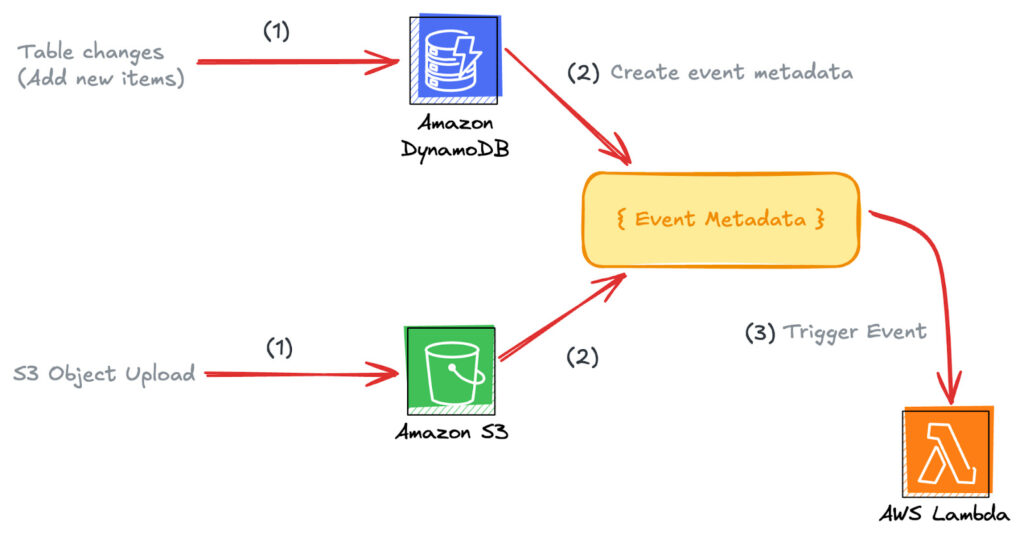

For this episode, we’ll create a simplified two-trigger workflow:

- S3 Trigger: Processes uploaded files and stores metadata in DynamoDB.

- DynamoDB Streams Trigger: Sends a notification via SNS when new metadata is added.

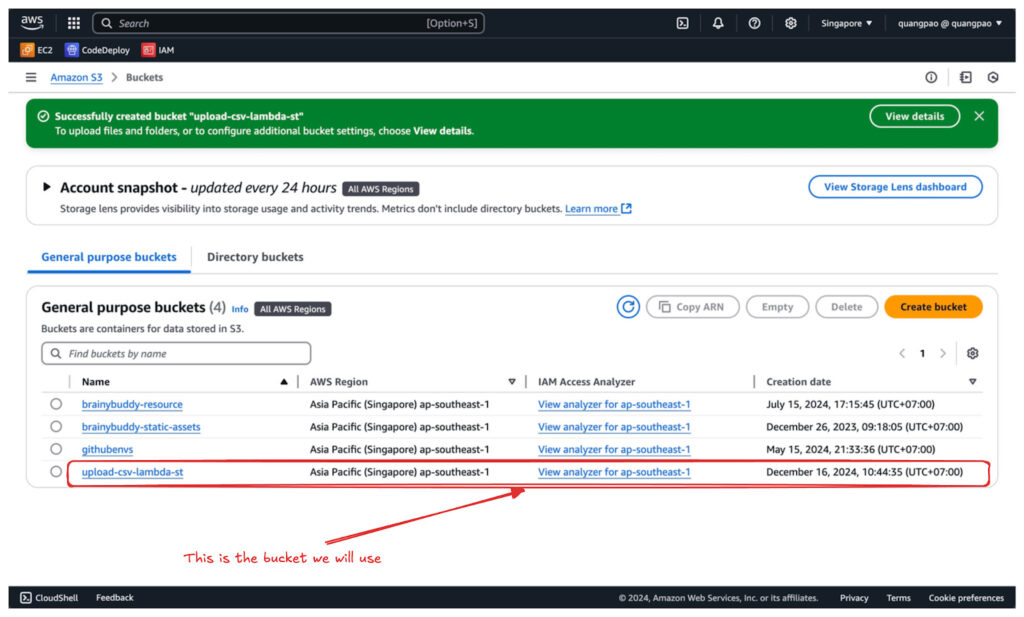

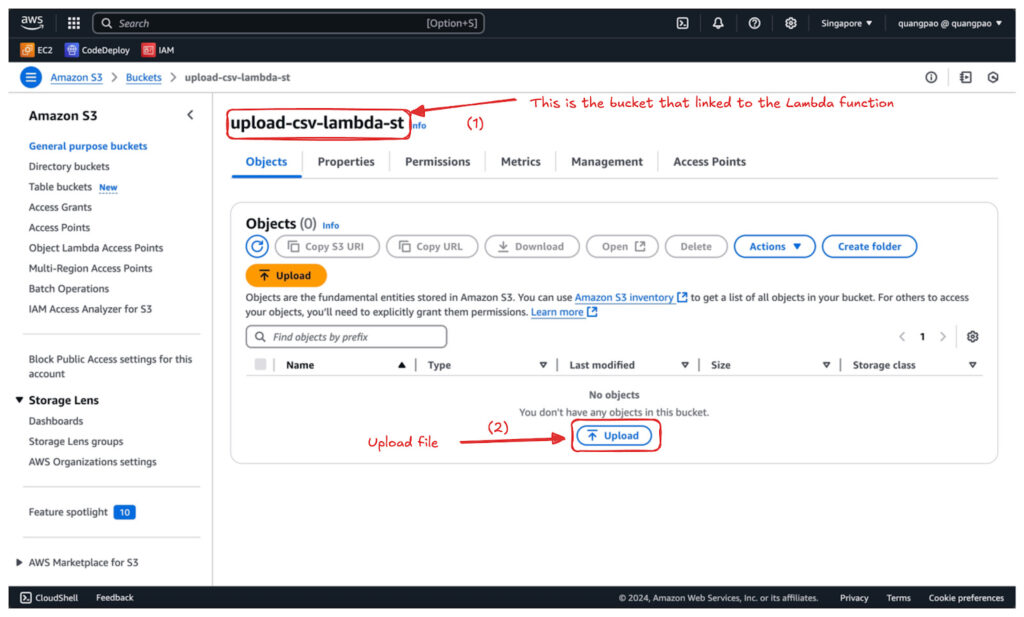

Step 1: Create an S3 Bucket

- Open the S3 Console in AWS.

- Click Create bucket and configure:

- Bucket name: Enter a unique name (e.g., upload-csv-lambda-st)

- Region: Choose your preferred region. (I will go with ap-southeast-1)

- Click Create bucket.

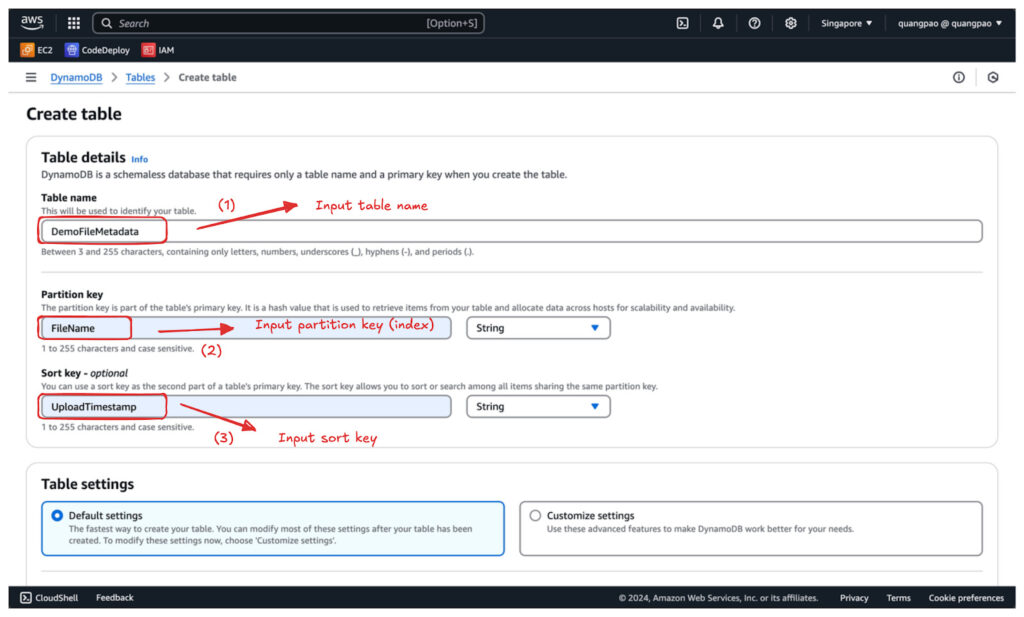

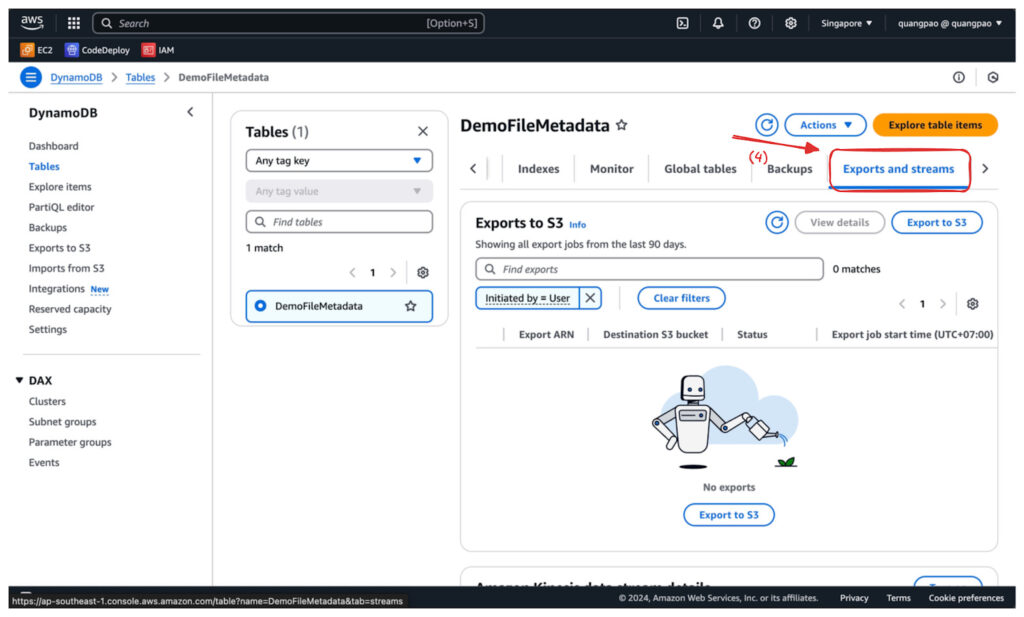

Step 2: Create a DynamoDB Table

- Navigate to the DynamoDB Console.

- Click Create table and configure:

- Table name: DemoFileMetadata.

- Partition key: FileName (String).

- Sort key: UploadTimestamp (String).

- Click Create table.

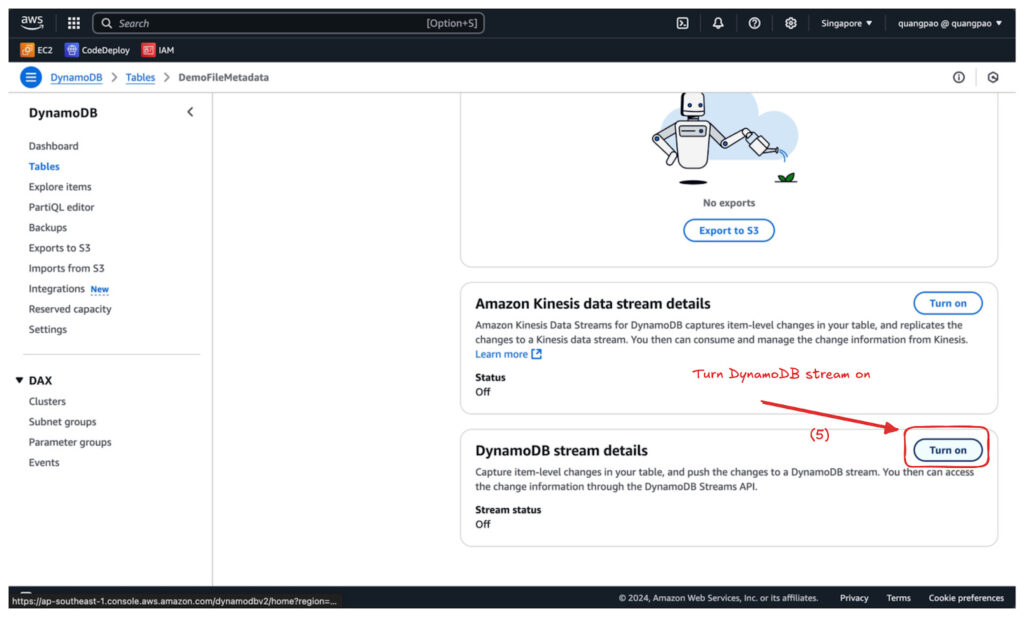

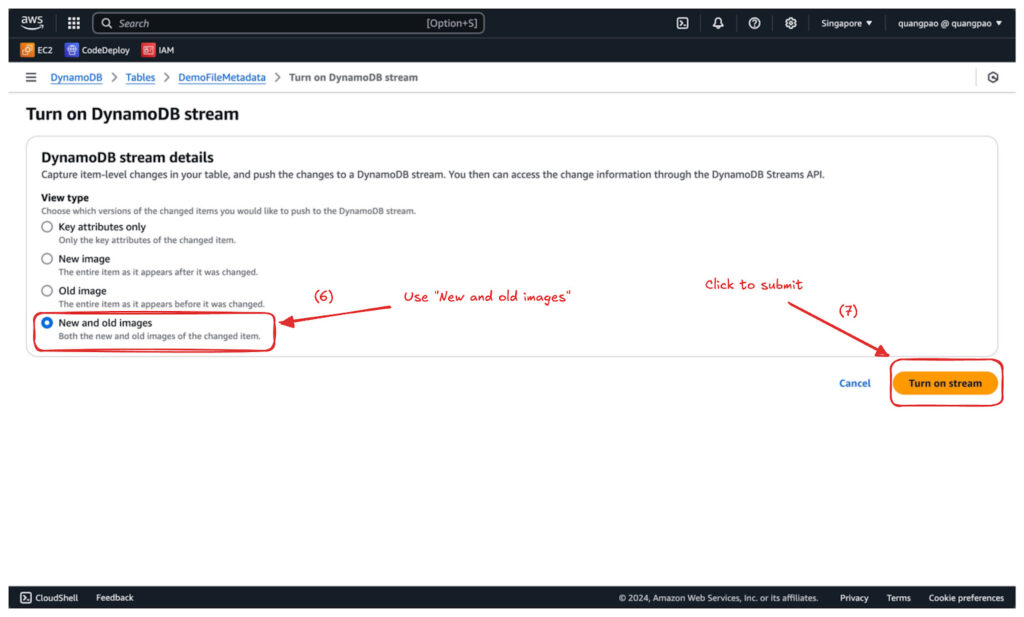

- Enable DynamoDB Streams with the option New and old images.

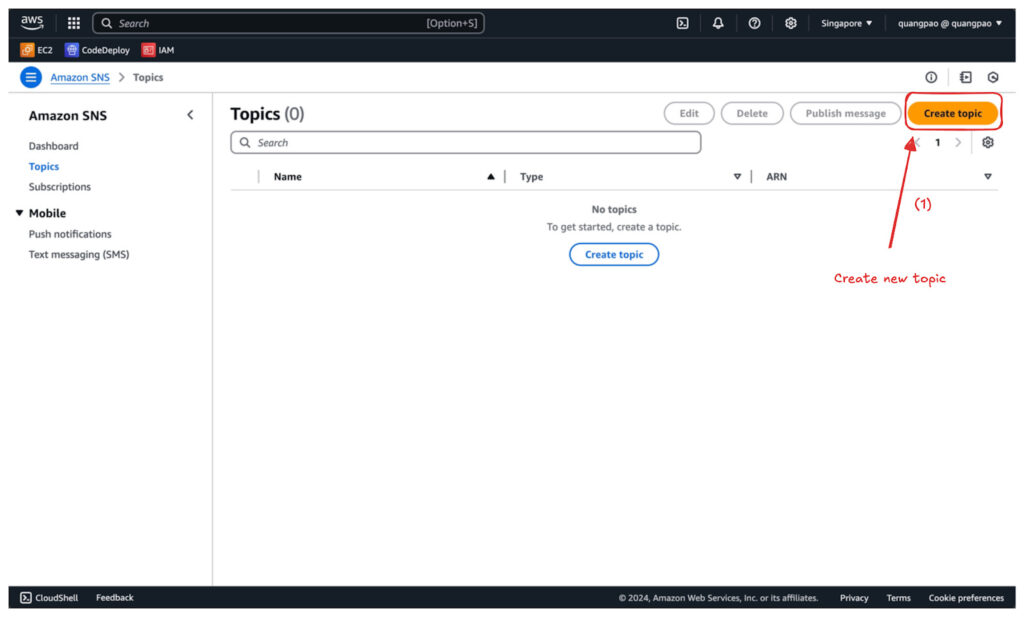

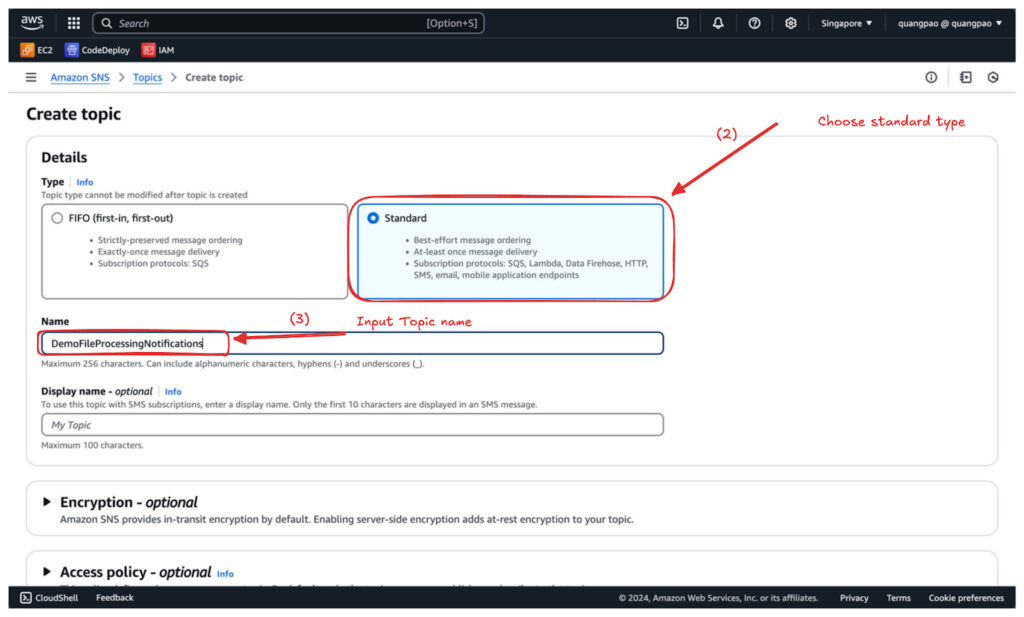

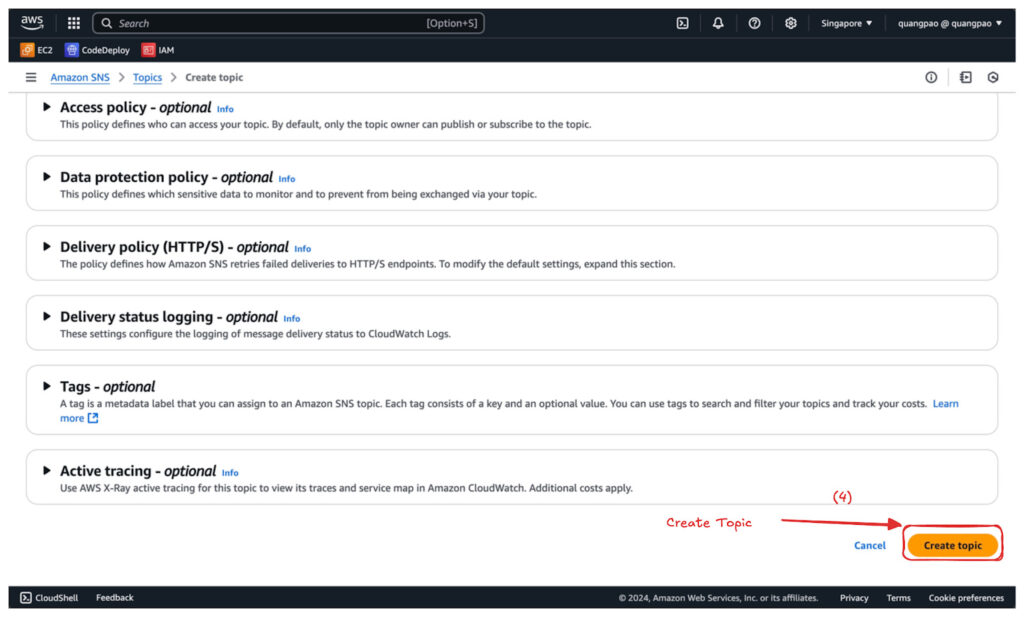
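With the New and old images option, each stream record can carry the item as it looked before and after the change (an INSERT only has a new image, a REMOVE only an old one). Attribute values arrive in DynamoDB's typed format, such as { "S": "text" }. The sketch below shows what such a record might look like and how to flatten it. The helper name flattenImage and the sample values are illustrative assumptions, and only the S, N, and BOOL types are handled.

```javascript
// Hypothetical helper: flattens a DynamoDB-typed image into a plain object.
// Only the S, N and BOOL attribute types are handled in this sketch.
function flattenImage(image = {}) {
  const plain = {};
  for (const [name, typed] of Object.entries(image)) {
    if ("S" in typed) plain[name] = typed.S;
    else if ("N" in typed) plain[name] = Number(typed.N);
    else if ("BOOL" in typed) plain[name] = typed.BOOL;
  }
  return plain;
}

// Illustrative MODIFY record, trimmed to the fields relevant here.
const sampleStreamRecord = {
  eventSource: "aws:dynamodb",
  eventName: "MODIFY",
  dynamodb: {
    OldImage: {
      FileName: { S: "example-file.csv" },
      UploadTimestamp: { S: "2025-01-10T00:00:00.000Z" },
      Status: { S: "Pending" },
    },
    NewImage: {
      FileName: { S: "example-file.csv" },
      UploadTimestamp: { S: "2025-01-10T00:00:00.000Z" },
      Status: { S: "Processed" },
    },
  },
};

console.log("before:", flattenImage(sampleStreamRecord.dynamodb.OldImage));
console.log("after:", flattenImage(sampleStreamRecord.dynamodb.NewImage));
```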

Step 3: Create an SNS Topic

- Navigate to the SNS Console.

- Click Create topic and configure:

- Topic type: Standard.

- Name: DemoFileProcessingNotifications.

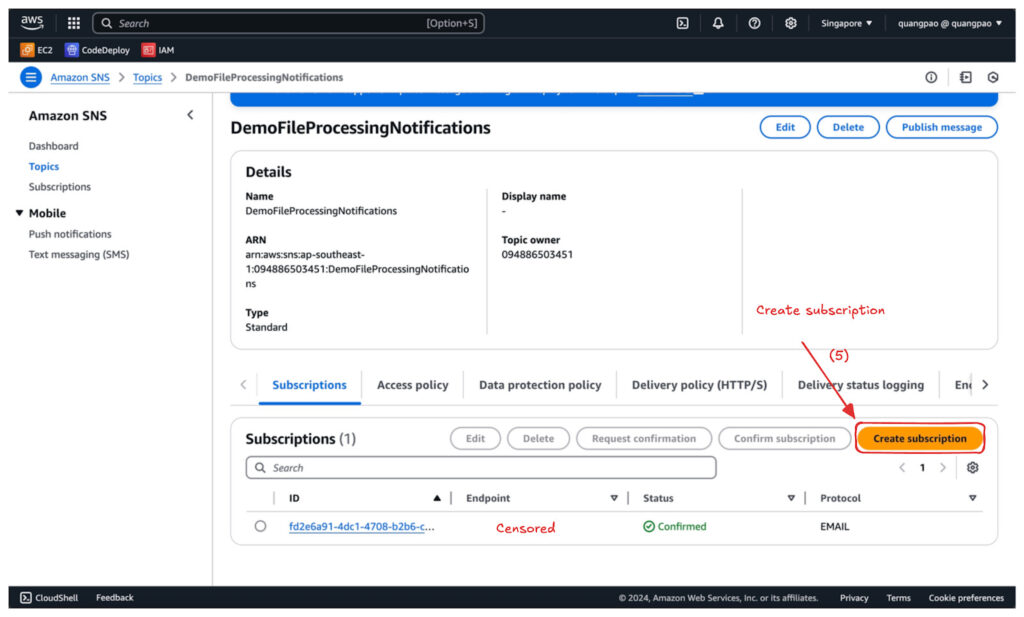

- Click Create topic.

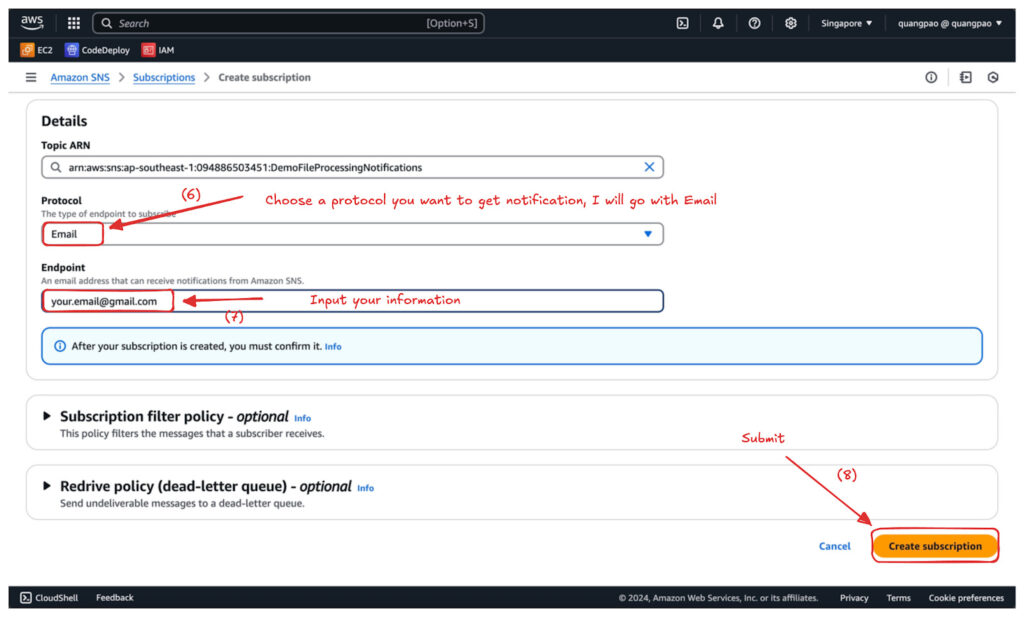

- Create a subscription.

- Confirm the subscription (in my case, the confirmation request is sent to my email).

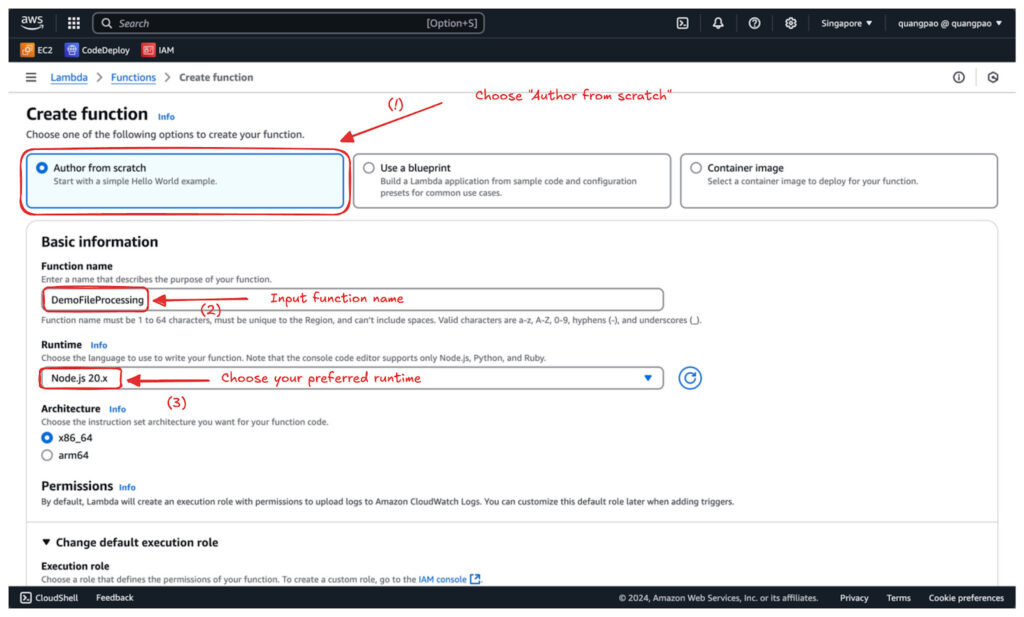

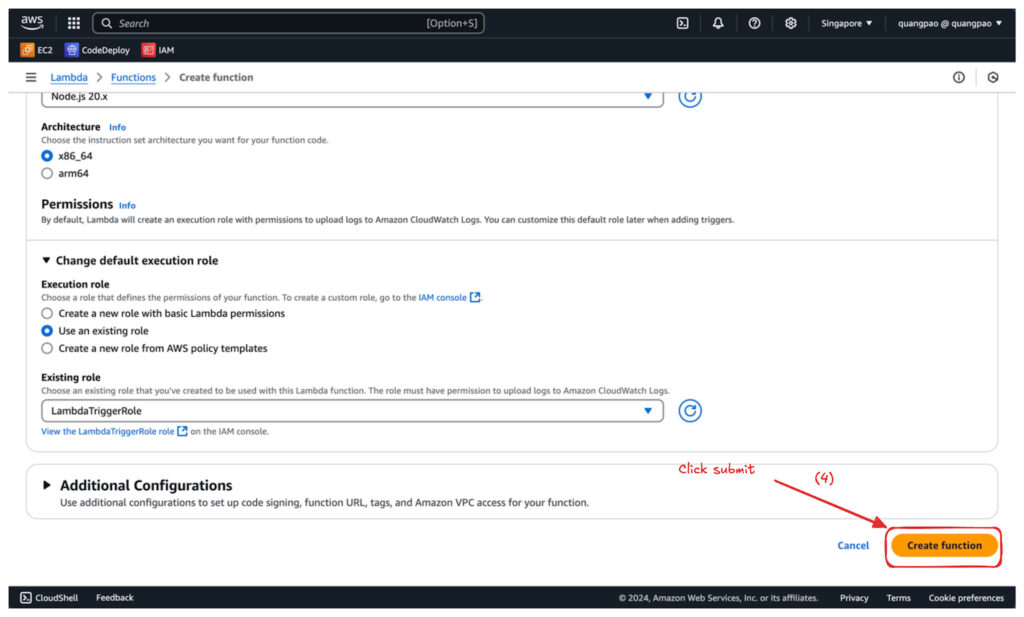

Step 4: Create a Lambda Function

- Navigate to the Lambda Console and click Create function.

- Choose Author from scratch and configure:

- Function name: DemoFileProcessing.

- Runtime: Select Node.js 20.x (or your preferred version).

- Execution role: Select the LambdaTriggerRole you created earlier.

- Click Create function.

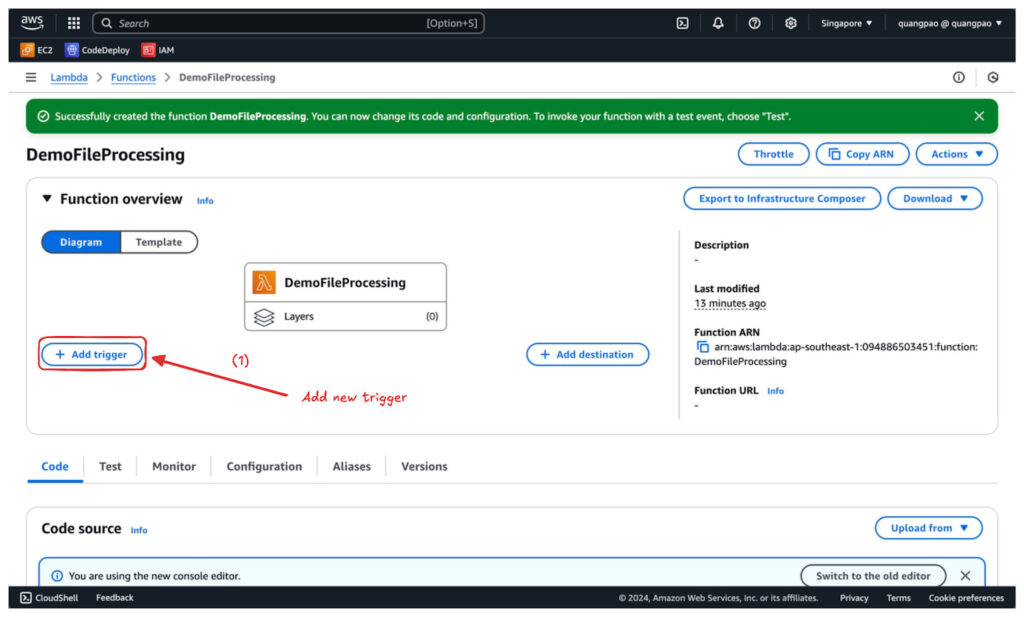

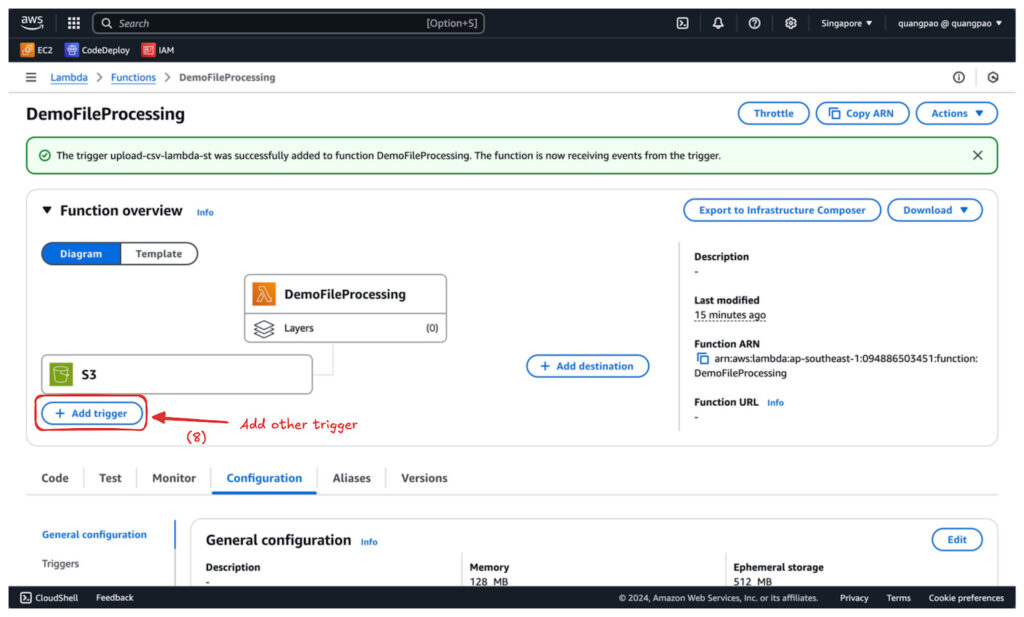

Step 5: Configure Triggers

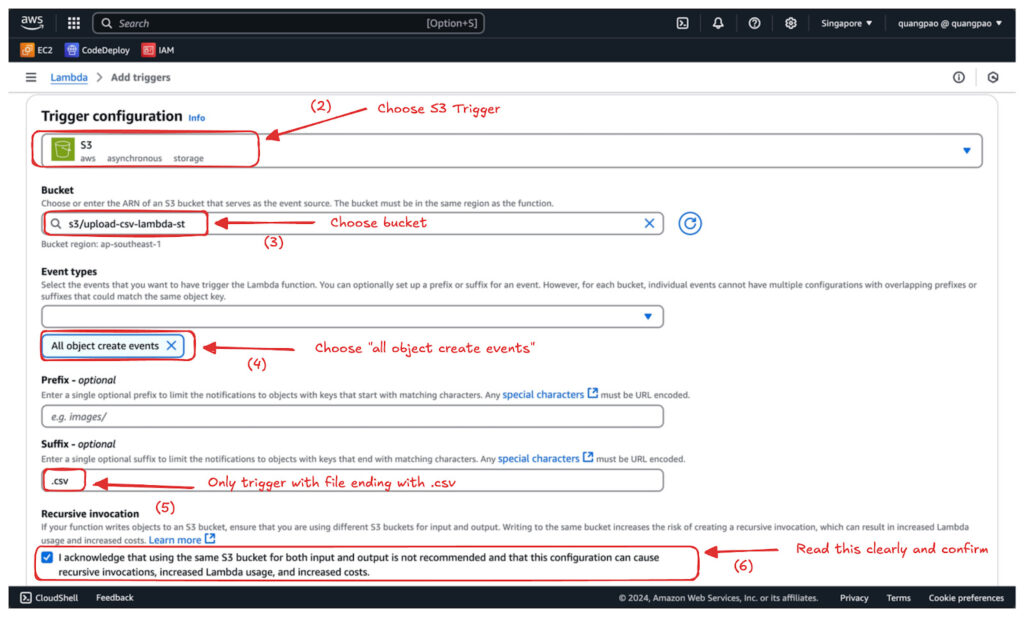

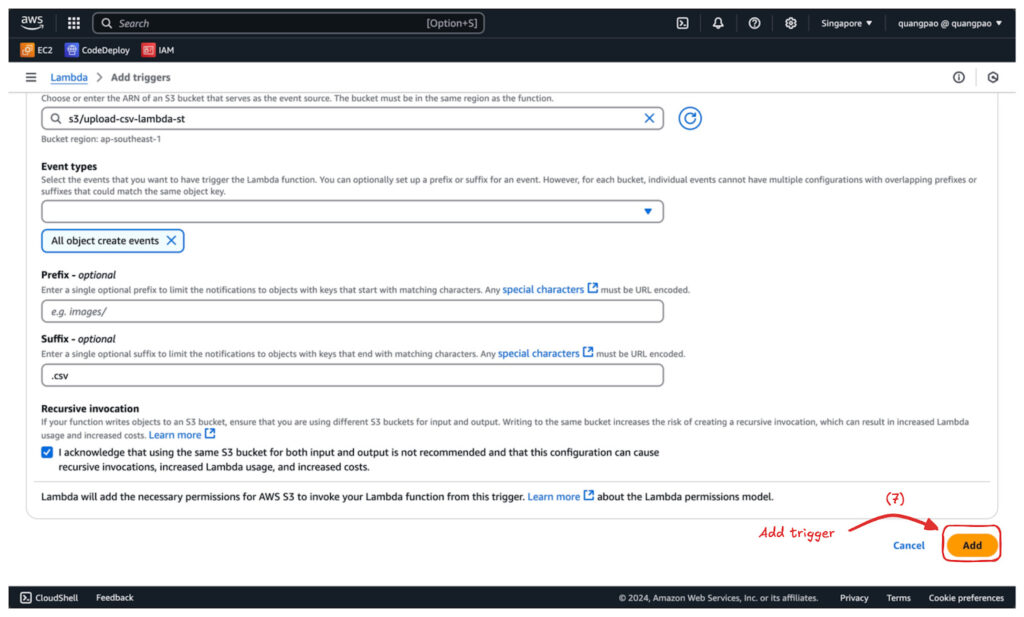

- Add S3 Trigger:

- Scroll to the Function overview section and click Add trigger.

- Select S3 and configure:

- Bucket: Select upload-csv-lambda-st.

- Event type: Choose All object create events.

- Suffix: Specify .csv to limit the trigger to CSV files.

- Click Add.

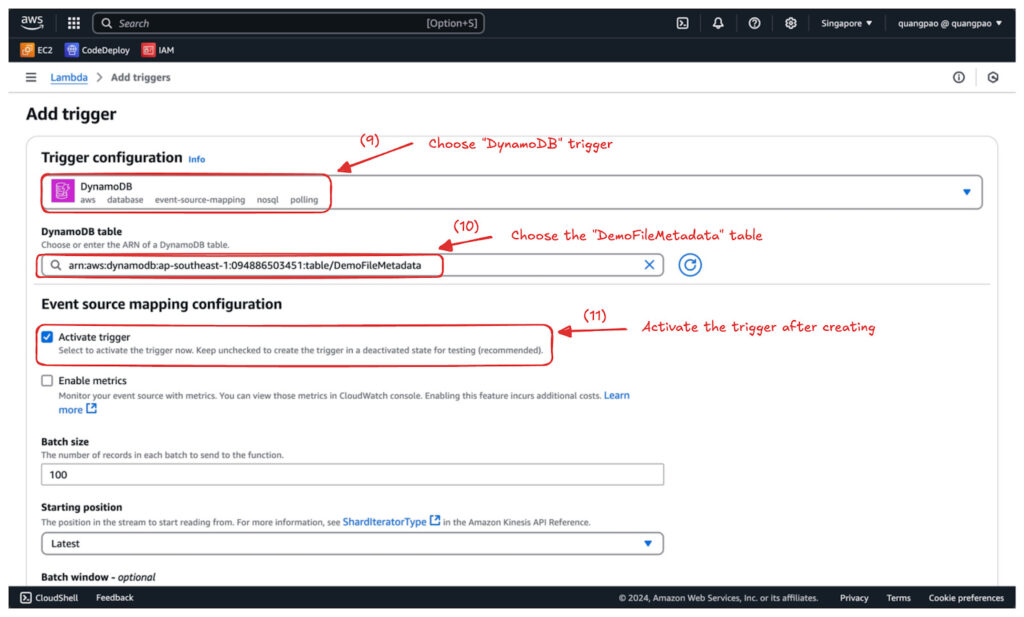

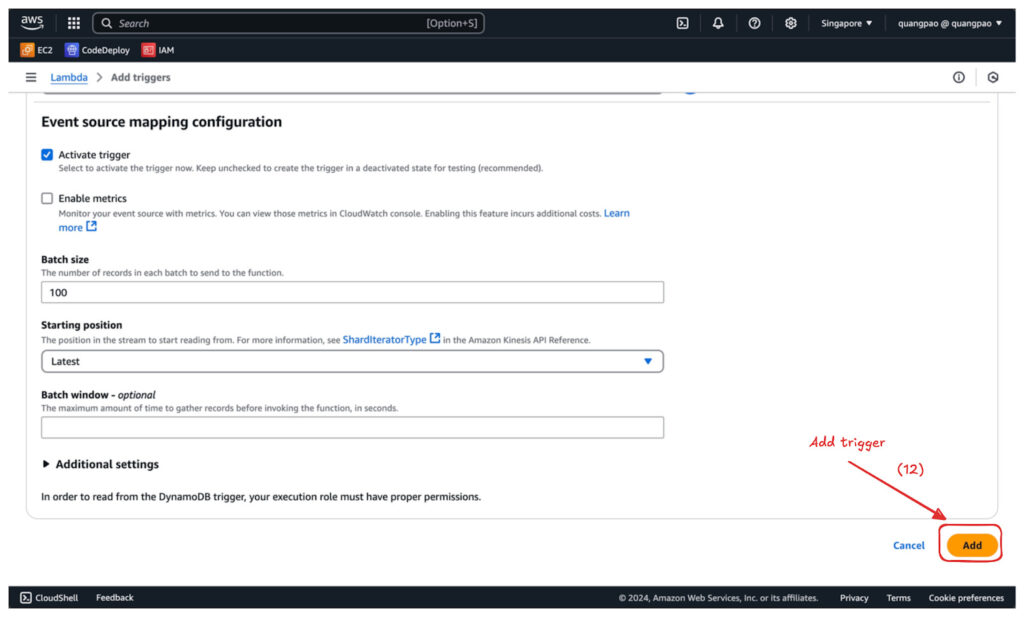

- Add DynamoDB Streams Trigger:

- Scroll to the Function overview section and click Add trigger.

- Select DynamoDB and configure:

- Table: Select DemoFileMetadata.

- Click Add.

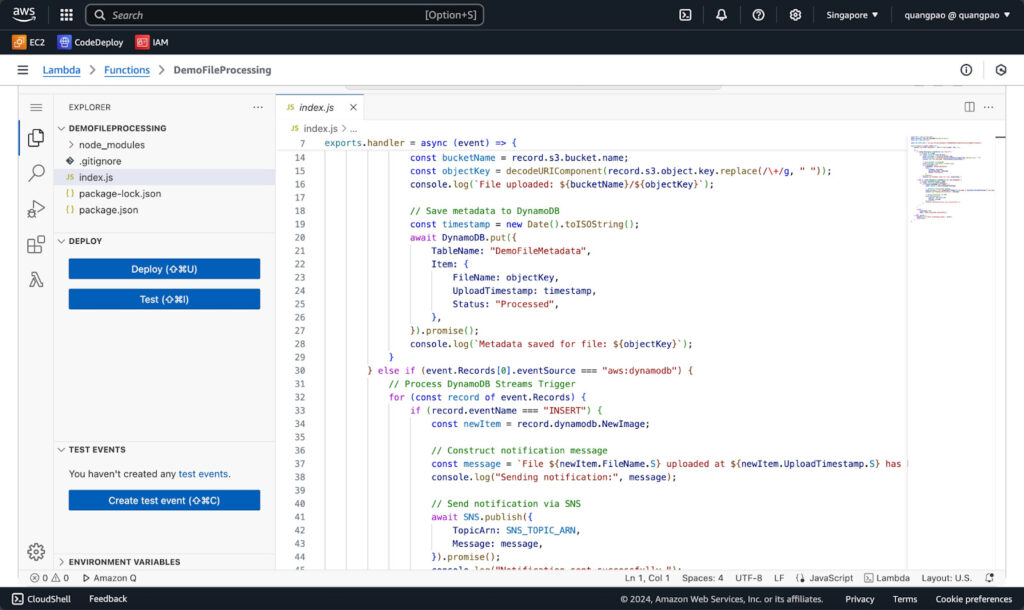

Writing the Lambda Function

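Below is the complete Node.js Lambda function that handles events from both the S3 and DynamoDB Streams triggers (Source code), followed by a detailed breakdown. Note that it uses the AWS SDK for JavaScript v2 (the aws-sdk package), which is not bundled with the Node.js 18.x and later Lambda runtimes; on Node.js 20.x, include aws-sdk in your deployment package or port the calls to SDK v3.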

    const AWS = require("aws-sdk");

    const S3 = new AWS.S3();
    const DynamoDB = new AWS.DynamoDB.DocumentClient();
    const SNS = new AWS.SNS();

    const SNS_TOPIC_ARN = "arn:aws:sns:region:account-id:DemoFileProcessingNotifications";

    exports.handler = async (event) => {
      console.log("Event Received:", JSON.stringify(event, null, 2));

      try {
        if (event.Records[0].eventSource === "aws:s3") {
          // Process S3 Trigger
          for (const record of event.Records) {
            const bucketName = record.s3.bucket.name;
            const objectKey = decodeURIComponent(record.s3.object.key.replace(/\+/g, " "));

            console.log(`File uploaded: ${bucketName}/${objectKey}`);

            // Save metadata to DynamoDB
            const timestamp = new Date().toISOString();
            await DynamoDB.put({
              TableName: "DemoFileMetadata",
              Item: {
                FileName: objectKey,
                UploadTimestamp: timestamp,
                Status: "Processed",
              },
            }).promise();

            console.log(`Metadata saved for file: ${objectKey}`);
          }
        } else if (event.Records[0].eventSource === "aws:dynamodb") {
          // Process DynamoDB Streams Trigger
          for (const record of event.Records) {
            if (record.eventName === "INSERT") {
              const newItem = record.dynamodb.NewImage;

              // Construct notification message
              const message = `File ${newItem.FileName.S} uploaded at ${newItem.UploadTimestamp.S} has been processed.`;

              console.log("Sending notification:", message);

              // Send notification via SNS
              await SNS.publish({
                TopicArn: SNS_TOPIC_ARN,
                Message: message,
              }).promise();

              console.log("Notification sent successfully.");
            }
          }
        }

        return {
          statusCode: 200,
          body: "Event processed successfully!",
        };
      } catch (error) {
        console.error("Error processing event:", error);
        throw error;
      }
    };

Detailed Explanation

- Importing Required AWS SDK Modules

    const AWS = require("aws-sdk");
    const S3 = new AWS.S3();
    const DynamoDB = new AWS.DynamoDB.DocumentClient();
    const SNS = new AWS.SNS();

- AWS SDK: Provides tools to interact with AWS services.

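- S3 Module: Creates an S3 client. This example does not actually call it, because the bucket name and object key come from the event itself; it would be needed to read the file contents.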

- DynamoDB Module: Used to store metadata in the DynamoDB table.

- SNS Module: Used to publish messages to the SNS topic.

- Defining the SNS Topic ARN

const SNS_TOPIC_ARN = "arn:aws:sns:region:account-id:DemoFileProcessingNotifications";

- This is the ARN of the SNS topic where notifications will be sent. Replace it with the ARN of your actual topic.

- Handling the Lambda Event

    exports.handler = async (event) => {
      console.log("Event Received:", JSON.stringify(event, null, 2));

- The event parameter contains information about the trigger that activated the Lambda function.

- The event can be from S3 or DynamoDB Streams.

- The event is logged for debugging purposes.

- Processing the S3 Trigger

    if (event.Records[0].eventSource === "aws:s3") {
      for (const record of event.Records) {
        const bucketName = record.s3.bucket.name;
        const objectKey = decodeURIComponent(record.s3.object.key.replace(/\+/g, " "));
        console.log(`File uploaded: ${bucketName}/${objectKey}`);

- Condition: Checks if the event source is S3.

- Loop: Iterates over all records in the S3 event.

- Bucket Name and Object Key: Extracts the bucket name and object key from the event.

- decodeURIComponent() is used to handle special characters in the object key.
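S3 URL-encodes object keys in event notifications and writes spaces as "+", which is why the handler replaces "+" with a space before decoding. A small sketch of that step in isolation follows; the helper name decodeS3Key and the sample keys are made up for illustration.

```javascript
// Hypothetical helper mirroring the decoding step used in the handler.
function decodeS3Key(rawKey) {
  // Turn "+" back into spaces first, then decode percent-escapes.
  // A literal "+" in the file name arrives as "%2B", so it survives.
  return decodeURIComponent(rawKey.replace(/\+/g, " "));
}

console.log(decodeS3Key("reports/my+file%282025%29.csv")); // reports/my file(2025).csv
console.log(decodeS3Key("a%2Bb+c.csv")); // a+b c.csv
```

Skipping this step would store the encoded form in DynamoDB, so the FileName would not match the key as it appears in the bucket.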

- Saving Metadata to DynamoDB

    const timestamp = new Date().toISOString();
    await DynamoDB.put({
      TableName: "DemoFileMetadata",
      Item: {
        FileName: objectKey,
        UploadTimestamp: timestamp,
        Status: "Processed",
      },
    }).promise();
    console.log(`Metadata saved for file: ${objectKey}`);

- Timestamp: Captures the current time as the upload timestamp.

- DynamoDB Put Operation:

- Writes the file metadata to the DemoFileMetadata table.

- Includes the FileName, UploadTimestamp, and Status.

- Promise: Calling .promise() on the put request returns a promise, which is awaited to ensure the operation completes.
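One way to make this step easier to test without touching AWS is to build the put parameters in a separate pure function. The sketch below assumes a hypothetical helper named buildPutParams; the table and attribute names are the ones used in this episode.

```javascript
// Hypothetical helper: builds the parameters passed to DynamoDB.put().
// "now" is injectable so the timestamp can be fixed in tests.
function buildPutParams(objectKey, now = new Date()) {
  return {
    TableName: "DemoFileMetadata",
    Item: {
      FileName: objectKey, // partition key
      UploadTimestamp: now.toISOString(), // sort key
      Status: "Processed",
    },
  };
}

const fixedTime = new Date("2025-01-10T08:30:00.000Z");
console.log(buildPutParams("example-file.csv", fixedTime));
```

In the handler this would be used as DynamoDB.put(buildPutParams(objectKey)).promise(). If you prefer the time S3 recorded for the upload over the time the function ran, each S3 event record also carries an eventTime field that could be passed in as new Date(record.eventTime).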

- Processing the DynamoDB Streams Trigger

    } else if (event.Records[0].eventSource === "aws:dynamodb") {
      for (const record of event.Records) {
        if (record.eventName === "INSERT") {
          const newItem = record.dynamodb.NewImage;

- Condition: Checks if the event source is DynamoDB Streams.

- Loop: Iterates over all records in the DynamoDB Streams event.

- INSERT Event: Filters only for INSERT operations in the DynamoDB table.

- Constructing and Sending the SNS Notification

    const message = `File ${newItem.FileName.S} uploaded at ${newItem.UploadTimestamp.S} has been processed.`;
    console.log("Sending notification:", message);
    await SNS.publish({
      TopicArn: SNS_TOPIC_ARN,
      Message: message,
    }).promise();
    console.log("Notification sent successfully.");

- Constructing the Message:

- Uses the file name and upload timestamp from the DynamoDB Streams event.

- SNS Publish Operation:

- Sends the constructed message to the SNS topic.

- Promise: Calling .promise() on the publish request returns a promise, which is awaited to ensure the message is sent.
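The message construction can likewise be pulled into a small function that tolerates missing attributes. This is only a sketch: the helper name buildNotification, the fallback strings, and the Subject text are assumptions, while TopicArn, Subject, and Message are standard SNS publish parameters.

```javascript
// Hypothetical helper: builds SNS publish parameters from a stream record's
// NewImage, falling back to placeholders if an attribute is missing.
function buildNotification(newImage, topicArn) {
  const fileName = newImage?.FileName?.S ?? "(unknown file)";
  const uploadedAt = newImage?.UploadTimestamp?.S ?? "(unknown time)";
  return {
    TopicArn: topicArn,
    Subject: "File processed", // optional; shown as the email subject
    Message: `File ${fileName} uploaded at ${uploadedAt} has been processed.`,
  };
}

const sampleNewImage = {
  FileName: { S: "example-file.csv" },
  UploadTimestamp: { S: "2025-01-10T08:30:00.000Z" },
};

console.log(
  buildNotification(
    sampleNewImage,
    "arn:aws:sns:region:account-id:DemoFileProcessingNotifications"
  )
);
```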

- Error Handling

    } catch (error) {
      console.error("Error processing event:", error);
      throw error;
    }

- Any errors during event processing are caught and logged.

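- The error is re-thrown so that Lambda marks the invocation as failed instead of silently succeeding; the failure then shows up in CloudWatch, and the event source can retry the event.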
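Re-throwing fails the whole batch, so every record in it is retried. For stream triggers, Lambda also supports reporting only the failed position through a batchItemFailures response, provided the "Report batch item failures" setting is enabled on the trigger. The sketch below illustrates that idea and is not part of this episode's function; the wrapper name processStreamBatch and the processRecord callback are hypothetical.

```javascript
// Hypothetical wrapper: processes stream records in order and reports the
// first failure so that Lambda can retry from that record onward.
async function processStreamBatch(records, processRecord) {
  const batchItemFailures = [];
  for (const record of records) {
    try {
      await processRecord(record);
    } catch (error) {
      console.error("Record failed:", record.dynamodb.SequenceNumber, error);
      batchItemFailures.push({ itemIdentifier: record.dynamodb.SequenceNumber });
      // Stream records are ordered, so stop at the first failure.
      break;
    }
  }
  return { batchItemFailures };
}

// Demo with a fake processor that fails on the second record.
const demoRecords = [
  { dynamodb: { SequenceNumber: "100" } },
  { dynamodb: { SequenceNumber: "200" } },
  { dynamodb: { SequenceNumber: "300" } },
];

const failOnSecond = async (record) => {
  if (record.dynamodb.SequenceNumber === "200") {
    throw new Error("simulated failure");
  }
};

processStreamBatch(demoRecords, failOnSecond).then((result) => {
  console.log(JSON.stringify(result));
});
```

An empty batchItemFailures array tells Lambda the whole batch succeeded.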

- Lambda Function Response

    return {
      statusCode: 200,
      body: "Event processed successfully!",
    };

- After processing all events, the function returns a successful response.

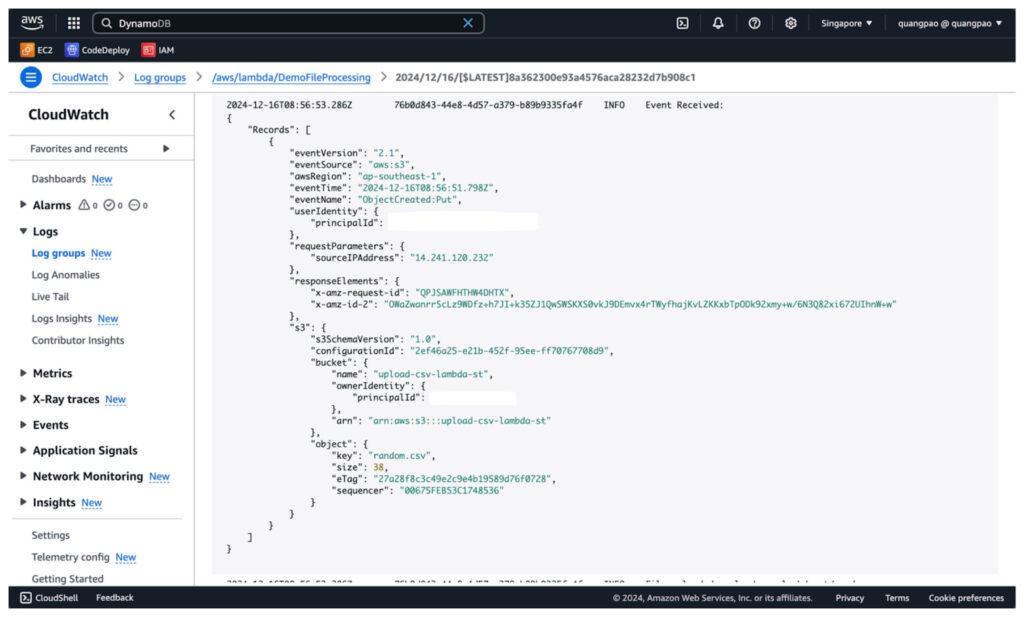

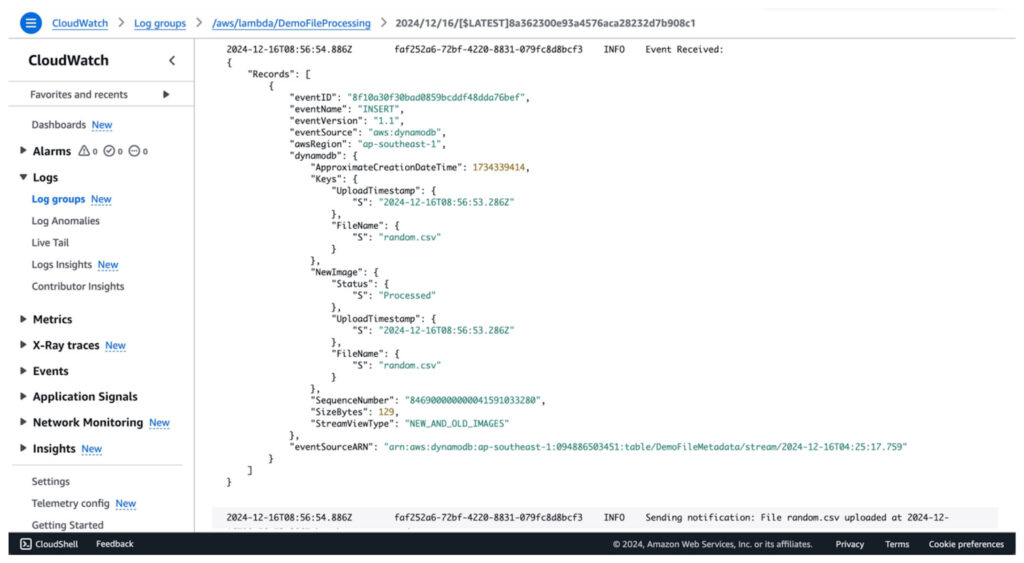

Test The Lambda Function

- Upload the code into AWS Lambda.

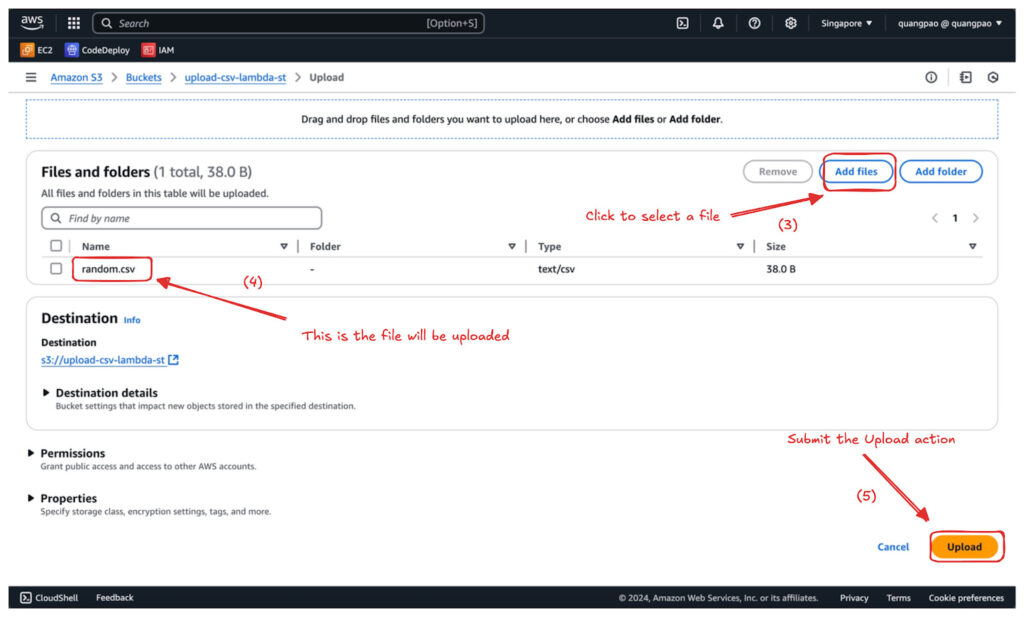

- Navigate to the S3 Console and choose the bucket you linked to the Lambda Function.

- Upload a .csv file (for example, random.csv) to the bucket.

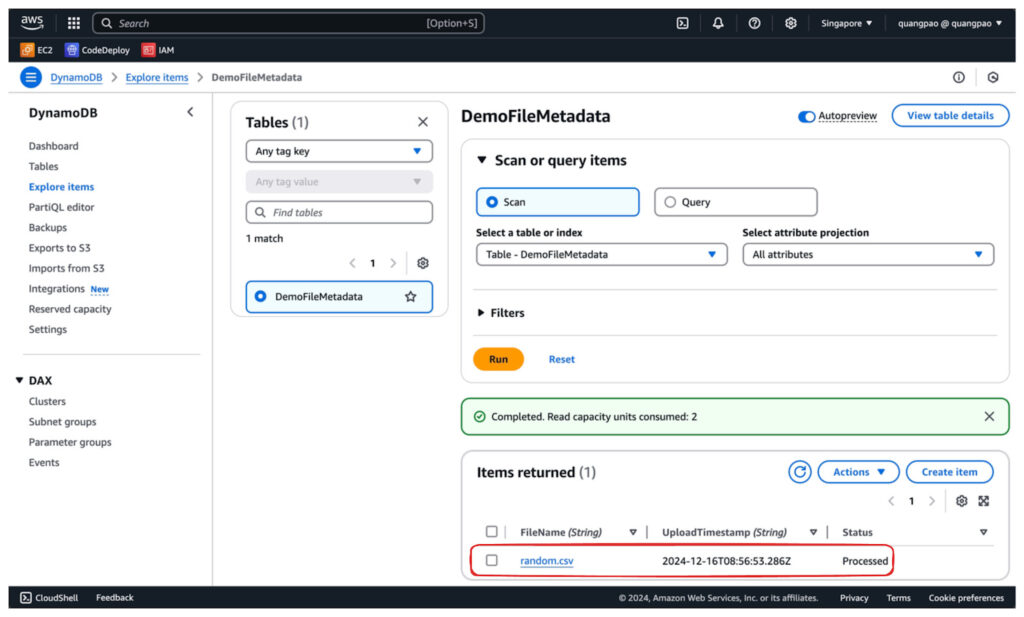

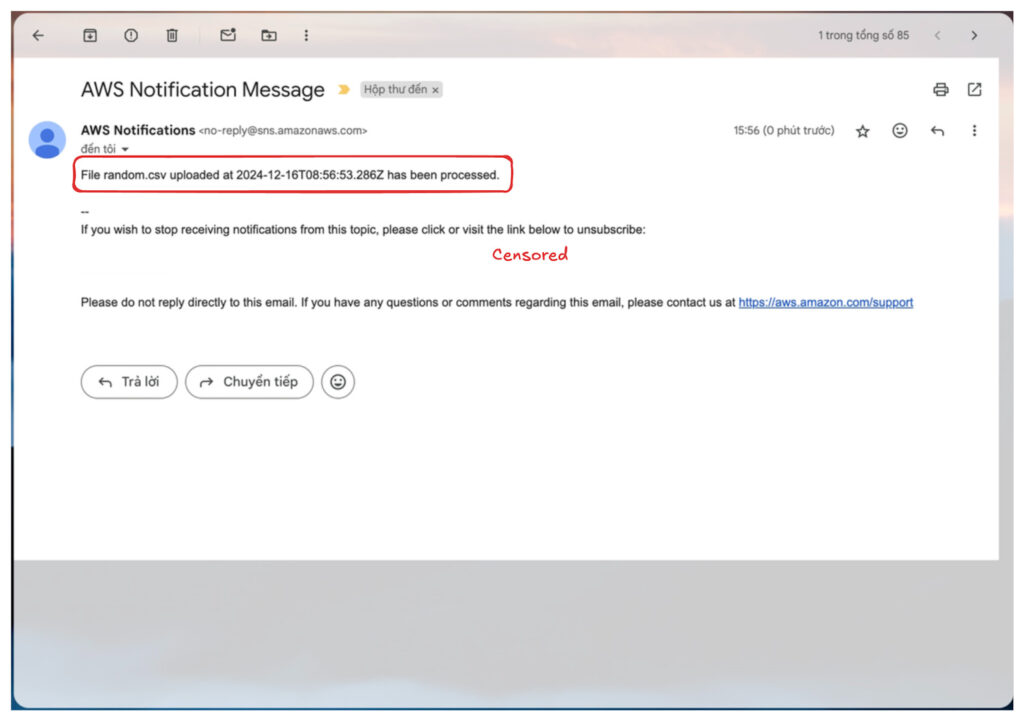

- Check the result:

- DynamoDB Table Entry

- SNS Notifications

- CloudWatch Logs
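Besides uploading a real file, the S3 branch can be exercised with a hand-built event, for example pasted into the Test tab of the Lambda console. The sketch below generates one. The helper name makeS3TestEvent is hypothetical, the event is trimmed to the fields this episode's handler reads, and the key encoding only approximates what S3 sends.

```javascript
// Hypothetical helper: builds a minimal S3-style test event.
function makeS3TestEvent(bucket, key) {
  // Approximate S3's encoding: percent-encode each path segment and
  // write spaces as "+", which the handler decodes again.
  const encodedKey = key
    .split("/")
    .map((segment) => encodeURIComponent(segment).replace(/%20/g, "+"))
    .join("/");

  return {
    Records: [
      {
        eventSource: "aws:s3",
        eventName: "ObjectCreated:Put",
        s3: {
          bucket: { name: bucket },
          object: { key: encodedKey },
        },
      },
    ],
  };
}

const testEvent = makeS3TestEvent("upload-csv-lambda-st", "uploads/random file.csv");
console.log(JSON.stringify(testEvent, null, 2));
```

Invoking the function with this event should still write a row to DynamoDB, which in turn fires the stream trigger and the SNS notification.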

So, we successfully created a Lambda function that responds to two triggers. It’s pretty simple. Just remember to delete the resources you created after use to avoid incurring unnecessary costs!

Conclusion

In this episode, we explored AWS Lambda’s foundational concepts of triggers and events. Triggers allow Lambda functions to respond to specific actions, such as file uploads to S3 or changes in a DynamoDB table. Events are the structured data passed to the Lambda function, containing details about what triggered it.

We also implemented a practical example to demonstrate how a single Lambda function can handle multiple triggers:

- An S3 trigger processed uploaded files by extracting metadata and saving it to DynamoDB.

- A DynamoDB Streams trigger sent notifications via SNS when new metadata was added to the table.

This example illustrated the flexibility of Lambda’s event-driven architecture and how it integrates with other AWS services to automate workflows. In the next episode, we’ll discuss best practices for AWS Lambda functions: optimizing performance, handling errors effectively, and securing your functions. Stay tuned to continue enhancing your serverless expertise!
