A to Z about Shopify App Bridge

22/04/2024

Howdy, tech fellows! It’s Linh again with SupremeTech’s blog series. You may or may not know this, but we provide a range of solutions for Shopify-based businesses. That’s why Shopify topics are among the most common ones you will see here. If you are interested in growing your business with Shopify or Shopify Plus, don’t miss out. This article is all about Shopify App Bridge, from a non-technical point of view.

What is Shopify App Bridge?

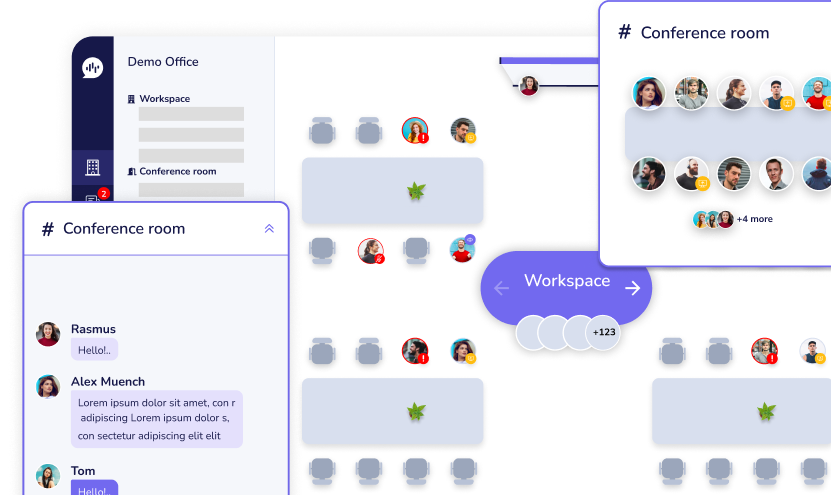

Shopify App Bridge is a framework provided by Shopify that allows developers to create embedded applications within the Shopify ecosystem. In short, it helps you build, connect, and publish apps that can be customized to your specific needs. It serves as a bridge between third-party apps and the Shopify platform, so developers can integrate their apps directly into the Shopify Admin interface.

Why Shopify App Bridge?

If you want to succeed on a platform, you have to play by its rules. Shopify App Bridge lets you add some custom tricks of your own while still following those rules. Here are some common features of the framework that help you manage your stores better with less effort.

- Embedded App Experiences: Merchants can access and interact with third-party app functionalities without leaving their Shopify dashboard.

- Enhancing Shopify Functionality: Adding custom features, automating tasks, or integrating with other services to streamline business operations.

- Customizing Shopify Admin Interface: Merchants can tailor their dashboard to their specific needs and preferences, improving efficiency and productivity.

- Cross-Platform Integration: It supports integration across various platforms, including web, mobile, and other third-party applications. This reduces the effort required when your business strategy changes or when you migrate to another platform.

- Improving User Experience: It eliminates the need for merchants to switch between different interfaces, leading to a more intuitive workflow. As a result, merchants can serve their customers faster.

- Enhanced Security: The bridge includes built-in security features to ensure that only authorized users and apps can access sensitive data within the Shopify ecosystem.

In short, Shopify App Bridge offers tools for customizing your store admin well beyond what you get out of the box.

Is it exclusive for developers?

Primarily, yes, especially when it comes to highly customized solutions for large-scale businesses. However, its benefits extend to several other groups within the Shopify ecosystem:

- Shopify Merchants: Merchants who use the Shopify platform can benefit from apps built with Shopify App Bridge. These apps enhance the functionality of their Shopify stores, offering additional features, automating tasks, and improving the overall user experience.

- Shopify Partners: Shopify Partners, including agencies and freelancers, can utilize Shopify App Bridge to create custom solutions for their clients. By building embedded applications tailored to their clients’ specific needs, Shopify Partners can provide added value and differentiate their services.

- Third-Party App Developers: Developers who create apps for the Shopify App Store can use Shopify App Bridge to enhance their app’s integration with the Shopify platform. By embedding their apps directly within the Shopify Admin, they can provide a more seamless experience for merchants using their products.

- E-commerce Solution Providers: Companies that offer e-commerce solutions or services can leverage Shopify App Bridge to integrate their offerings with the Shopify platform. This allows them to provide their clients with a more comprehensive and integrated solution for managing their online stores.

Key features of Shopify App Bridge

Some of its primary features include:

- Embedded App Experiences: Shopify App Bridge enables developers to build apps that seamlessly integrate with the Shopify Admin interface. These embedded apps appear directly within the Shopify dashboard, providing merchants with a cohesive and intuitive user experience.

- UI Components: The framework provides a library of UI components that developers can use to create consistent and visually appealing interfaces for their embedded apps. These components maintain the look and feel of the Shopify platform, ensuring a seamless user experience.

- App Persistence: Apps built with Shopify App Bridge can maintain state and context across different pages and interactions within the Shopify Admin. This allows for a smoother user experience, as merchants can seamlessly navigate between different app functionalities without losing their progress.

- Cross-Platform Compatibility: Shopify App Bridge supports integration across various platforms, including web, mobile, and other third-party applications. This ensures that merchants can access embedded app experiences regardless of the device or platform they are using.

- Enhanced Security: The framework includes built-in security features to ensure that only authorized users and apps can access sensitive data within the Shopify ecosystem. This helps to protect merchants’ information and maintain the integrity of the platform.

- App Bridge Action: App Bridge Action is a feature that allows developers to perform actions within Shopify Admin, such as navigating to specific pages or performing tasks, directly from their embedded apps. This helps to streamline workflows and improve efficiency for merchants.

- App Bridge APIs: Shopify App Bridge provides a set of APIs that developers can use to interact with the Shopify platform and access various functionalities, such as fetching data, managing orders, and updating settings. These APIs enable developers to build robust and feature-rich embedded applications.
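For the technically curious, here is a rough idea of what the "Enhanced Security" point can look like on an app's server. Embedded apps authenticate their requests with session tokens, which are JSON Web Tokens signed with HS256 using the app's client secret. The snippet below is only a minimal sketch of that check, not Shopify's own implementation: the helper names `verifySessionToken` and `signDemoToken`, the claim subset, and the demo shop, client ID, and secret are all assumptions made up for illustration. In a real app you would most likely rely on Shopify's official server libraries instead of hand-rolling this.

```typescript
import { createHmac, timingSafeEqual } from "node:crypto";

// Subset of the claims found in a session token payload (illustrative).
interface SessionTokenClaims {
  iss: string;  // shop admin URL
  dest: string; // shop URL
  aud: string;  // the app's client ID
  sub: string;  // ID of the user the token was issued for
  exp: number;  // expiry, in seconds since the epoch
  nbf: number;  // not valid before, in seconds since the epoch
}

const toBase64Url = (text: string): string =>
  Buffer.from(text, "utf8").toString("base64url");

// Hypothetical helper: returns the claims if the token is valid, else null.
function verifySessionToken(
  token: string,
  clientId: string,
  clientSecret: string,
  nowSeconds: number = Math.floor(Date.now() / 1000),
): SessionTokenClaims | null {
  const parts = token.split(".");
  if (parts.length !== 3) return null;
  const [header, payload, signature] = parts as [string, string, string];

  // Recompute the HS256 signature over "header.payload" and compare
  // it to the received one in constant time.
  const expected = createHmac("sha256", clientSecret)
    .update(`${header}.${payload}`)
    .digest();
  const received = Buffer.from(signature, "base64url");
  if (received.length !== expected.length) return null;
  if (!timingSafeEqual(received, expected)) return null;

  let claims: SessionTokenClaims;
  try {
    claims = JSON.parse(Buffer.from(payload, "base64url").toString("utf8"));
  } catch {
    return null;
  }

  // The token must be addressed to this app and be inside its validity window.
  if (claims.aud !== clientId) return null;
  if (claims.exp <= nowSeconds || claims.nbf > nowSeconds) return null;
  return claims;
}

// Hypothetical helper for the demo only: signs a token the way the check expects.
function signDemoToken(claims: SessionTokenClaims, clientSecret: string): string {
  const header = toBase64Url(JSON.stringify({ alg: "HS256", typ: "JWT" }));
  const payload = toBase64Url(JSON.stringify(claims));
  const signature = createHmac("sha256", clientSecret)
    .update(`${header}.${payload}`)
    .digest("base64url");
  return `${header}.${payload}.${signature}`;
}
```

A token signed with a different secret, addressed to another app, or past its expiry time makes `verifySessionToken` return `null`, which is the "only authorized users and apps" idea in practice.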

Conclusion

In a nutshell, Shopify App Bridge is a game-changer for developers looking to jazz up Shopify stores. With its cool features like embedded apps, user-friendly UI bits, and the ability to keep things running smoothly even as you hop around the store, it’s like the Swiss Army knife of Shopify customization. Plus, it’s got your back on security, making sure only the right peeps get access to the good stuff. So, whether you’re a developer dreaming up the next big thing or a merchant wanting to spruce up your online digs, Shopify App Bridge has got you covered, making your Shopify journey a breeze!

If you are looking for a way to boost your business on Shopify, maybe we can help! Whether it’s Shopify custom development services for large-scale businesses or Shopify custom apps for individual requests, we are confident we can deliver.
