5 Tips For Staying Motivated While Working From Home

23/07/2021

How’s your day been so far? We hope you’re all doing well and staying healthy and safe.

Given the COVID outbreak in Da Nang, it looks like most of us will be working from home for the foreseeable future.

Whether you’re home alone and the house is too quiet, or you’re home with the family and the kids are out of control, you may find it’s tough to stay on task, get your work done, and feel productive.

According to one survey, 91% of employees say they’ve experienced moderate to extreme stress while working from home during the pandemic.

So how can we deal with those distractions? How can we stay focused and maintain productivity while working from home? And how can we keep ourselves happy along the way?

Here are some tips that can help you stay motivated when you work from home.

1. Get dressed

Pajamas and a comfortable seat on the sofa just don’t provide the same type of motivation you get from a suit and an office chair, right? So how about taking a shower and dressing up like you’re going to rock “the office runway”? Clothes have a strong psychological impact on motivation when we work from home, so change into something that signals to your brain that it’s time to work.

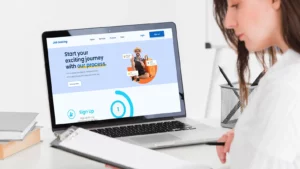

2. Create your own dedicated workspace

Not everyone has a home office, and you might be tempted to work in bed. But when you associate your bed with work, it can affect your performance for the whole day. Believe me, guys: reserve your bed for sleep and … sexual activity only.

The kitchen table or a desk beside a sunny window in the living room might be a better alternative to your bedroom. Oh, and it would be even better if you could find a room where you can actually shut the door while you’re working.

3. Start working with a to-do list

Writing out your to-do list every morning will make your day look less messy and more manageable, and it will help you stay focused on each task. It also helps you track your performance more effectively.

It is really tempting to try to multitask at home, but you’re actually more productive if you focus on one thing at a time.

4. Take breaks

Breaks can help IMMENSELY if you work from home! A 5- or 7-minute break every hour can really boost your energy. During that time, focus on an activity that lets you disconnect from your computer mentally and physically, such as taking a short walk, stretching, meditating, eating a healthy snack, or cuddling your pet.

5. Healthy eating

Make sure you actually eat, and drink plenty of water. You’ll never be at your best if you’re exhausted and running only on caffeine and sugar. You need a healthy diet, plenty of rest, and good self-care strategies to perform at your peak. Working from home might be an ideal time to try some fast, fresh and exciting recipes. By focussing on maintaining a balanced diet, we can reap the health benefits no matter our surroundings, and improve our work performance along the way.

Conclusion

Remote work is the new normal for many people now that COVID-19 has stopped so many of us from performing our work duties in offices. We all know it’s hard to stay focused, but how about trying these tips to stay motivated? You may find that working from home can be fun, fulfilling, and highly productive. It can also be an opportunity for you to do your best work in the comfort of your home ^^.